Gödel, Escher, Bach: An Eternal Golden Braid (60 page)

Read Gödel, Escher, Bach: An Eternal Golden Braid Online

Authors: Douglas R. Hofstadter

Tags: #Computers, #Art, #Classical, #Symmetry, #Bach; Johann Sebastian, #Individual Artists, #Science, #Science & Technology, #Philosophy, #General, #Metamathematics, #Intelligence (AI) & Semantics, #G'odel; Kurt, #Music, #Logic, #Biography & Autobiography, #Mathematics, #Genres & Styles, #Artificial Intelligence, #Escher; M. C

"streets"-they are called "pages". So a given wo addressed by its page number (if memory is paged) together wit position within the page. Hence the "pointer" part of an instruction i numerical address of some word(s) in memory. There are no restric on the pointer, so an instruction may even "point" at itself, so that whet executed, it causes a change in itself to be made.

How does the computer know what instruction to execute at any € time? This is kept track of in the CPU. The CPU has a special pointer w points at (i.e., stores the address of) the next word which is to be inter ed as an instruction. The CPU fetches that word from memory, and c it electronically into a special word belonging to the CPU itself. (Wor the CPU are usually not called "words", but rather, registers.) Then the executes that instruction. Now the instruction may call for any of a number of types of operations to be carried out. Typical ones include:

ADD

the word pointed to in the instruction, to a register.

(In this case, the word pointed to is obviously interpreted as number.)

PRINT

the word pointed to in the instruction, as letters.

(In this case, the word is obviously interpreted

not

as a number, but as a string of letters.)

JUMP

to the word pointed to in the instruction.

(In this case, the

CPU

is being told to interpret that particular word as its next instruction.)

Unless the instruction explicitly dictates otherwise, the

CPU

will pick up the very next word and interpret it as an instruction. In other words, the

CPU

assumes that it should move down the "street" sequentially, like a mailman, interpreting word after word as an instruction. But this sequential order can be broken by such instructions as the

JUMP

instruction, and others.

Machine Language

vs

. Assembly language

This is a very brief sketch of

machine language

. In this language, the types of operations which exist constitute a finite repertoire which cannot be extended.

Thus all programs, no matter how large and complex, must be made out of compounds of these types of instructions. Looking at a program written in machine language is vaguely comparable to looking at a

DNA

molecule atom by atom. If you glance back to Fig. 41, showing the nucleotide sequence of a

DNA

molecule--and then if you consider that each nucleotide contains two dozen atoms or so-and if you imagine trying to write the

DNA

, atom by atom, for a small virus (not to mention a human being!)-then you will get a feeling for what it is like to write a complex program in machine language, and what it is like to try to grasp what is going on in a program if you have access only to its machine language description.

,

It must be mentioned, however, that computer programming was originally done on an even lower level, if possible, than that of machine language-

-namely, connecting wires to each other, so that the proper operations were "hard-wired" in. This is so amazingly primitive by modern standards that it is painful even to' imagine. Yet undoubtedly the people who first did it experienced as much exhilaration as the pioneers of modern computers ever do .. .

We now wish to move to a higher level of the hierarchy of levels of description of programs. This is the

assembly language

level. There is not a gigantic spread between assembly language and machine language; indeed, the step is rather gentle. In essence, there is a one-to-one correspondence between assembly language instructions and machine language instructions. The idea of assembly language is to "chunk" the individual machine language instructions, so that instead of writing the sequence of bits "010111000" when you want an instruction which adds one number to another, you simply write

ADD

, and then instead of giving the address in binary representation, you can refer to the word in memory by a

name

.

Therefore, a program in assembly language is very much like a machine language program made legible to humans. You might compare the machine language version of a program to a

TNT

-derivation done in the obscure Gödel-numbered notation, and the assembly language version to the isomorphic

TNT

-derivation, done in the original

TNT

-notation, which is much easier to understand. Or, going back to the

DNA

image, we can liken the difference between machine language and assembly language to the difference between painfully specifying each nucleotide, atom by atom, and specifying a nucleotide by simply giving its

name

(i.e.,

'A', 'G', 'C', or 'T'

). There is a tremendous saving of labor in this very simple "chunking" operation, although conceptually not much has been changed.

Programs That Translate Programs

Perhaps the central point about assembly language is not its differences from machine language, which are not that enormous, but just the key idea that programs could be written on a different level

at all

! Just think about it: the hardware is built to "understand" machine language programs-sequences of bits-but not letters and decimal numbers. What happens when hardware is fed a program in assembly language% It is as if you tried to get a cell to accept a piece of paper with the nucleotide sequence written out in letters of the alphabet, instead of in chemicals. What can a cell do with a piece of paper? What can a computer do with an assembly language program?

And here is the vital point: someone can write, in machine language, a

translation program

. This program, called an

assembler

, accepts mnemonic instruction names, decimal numbers, and other convenient abbreviations which a programmer can remember easily, and carries out the conversion into the monotonous but critical bit-sequences. After the assembly language program has been

assembled

(i.e., translated), it is

run

-or rather, its machine language equivalent is run. But this is a matter of terminology. Which level program is running? You can never go wrong if you say that the machine language program is running, for hardware is always involved when any program runs-but it is also quite reasonable to think of the running program in terms of assembly language.

For instance, you might very well say, "Right now, the

CPU

is executing a

JUMP

instruction", instead of saying, "Right now, the

CPU

is executing a ' 1 11010000'

instruction". A pianist who plays the notes

G-E-B E-G-B

is also playing an arpeggio in the chord of E minor. There is no reason to be reluctant about describing things from a higher-level point of view. So one can think of the assembly language program running concurrently with the machine language program. We have two modes of describing what the

CPU

is doing.

Higher-Level Languages, Compilers, and Interpreters

The next level of the hierarchy carries much further the extremely powerful idea of using the computer itself to translate programs from a high level into lower levels. After people had programmed in assembly language for a number of years, in the early 1950's, they realized that there were a number of characteristic structures which kept reappearing in program after program. There seemed to be, just as in chess, certain fundamental patterns which cropped up naturally when human beings tried to formulate algorithms--exact descriptions of processes they wanted carried out. In other words, algorithms seemed to have certain higher-level components, in terms of which they could be much more easily and esthetically specified than in the very restricted machine language, or assembly language. Typically, a high-level algorithm component consists not of one or two machine language instructions, but of a whole collection of them, not necessarily all contiguous in memory. Such a component could be represented in a higher-level language by a single item-a chunk.

Aside from standard chunks-the newly discovered components out of which all algorithms can be built-people realized that almost all programs contain even larger chunks-superchunks, so to speak. These superchunks differ from program to program, depending on the kinds of high-level tasks the j program is supposed to carry out. We discussed superchunks in Chapter V, calling them by their usual names: "subroutines" and "procedures". It was clear that a most powerful addition to any programming language would be the ability to define new higher-level entities in terms of previously known ones, and then to call them by name. This would build the chunking operation right into the language. Instead of there being a determinate repertoire of instructions out of which all programs had to be explicitly assembled, the programmer could construct his own modules, each with its own name, each usable anywhere inside the program, just as if it had been a built-in feature of the language. Of course, there is no getting away from the fact that down below, on a machine language level, everything would still be composed of the same old machine language instructions, but that would not be explicitly visible to the highlevel programmer; it would be implicit.

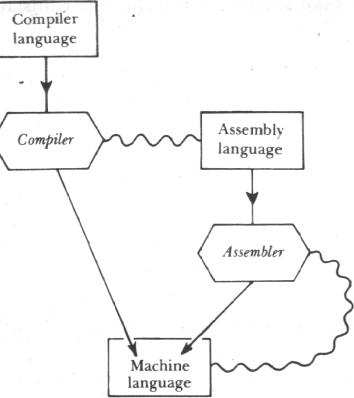

The new languages based on these ideas were called compiler languages.

One of the earliest and most elegant was called "Algol", for "Algorithmic Language". Unlike the case with assembly language, there is no straightforward one-to-one correspondence between statements in Algol and machine language instructions. To be sure, there is still a type of mapping from Algol into machine language, but it is far more "scrambled" than that between assembly language and machine language. Roughly speaking, an Algol program is to its machine language translation as a word problem in an elementary algebra text is to the equation it translates into. (Actually, getting from a word problem to an equation is far more complex, but it gives some inkling of the types of "unscrambling" that have to be carried out in translating from a high-level language to a lower-level Ian

guage.) In the mid-1950's, successful programs called compilers were written whose function was to carry out the translation from compiler languages to machine language.

Also, interpreters were invented. Like compilers, interpreters translate from high-level languages into machine language, but instead of translating all the statements first and then executing the machine code, they read one line and'

execute it immediately. This has the advantage that a user need not have written a complete program to use an interpreter. He may invent his program line by line, and test it out as he goes along. Thus, an interpreter is to a compiler as a simultaneous interpreter is to a translator of a written speech. One of the most important and fascinating of all computer languages is

LISP

(standing for "List Processing"), which was invented by John McCarthy around the time Algol was invented. Subsequently,

LISP

has enjoyed great popularity with workers in Artificial Intelligence.

There is one interesting difference between the way interpreters work and compilers work. A compiler takes input (a finished Algol program, for instance) and produces output (a long sequence of machine language instructions). At this point, the compiler has done its duty. The output is then given to the computer to run. By contrast, the interpreter is constantly running while the programmer types in one

LISP

statement after another, and each one gets executed then' and there.

But this doesn't mean that each statement gets first translated, then executed, for then an interpreter would be nothing but a line-by-line compiler. Instead, in an interpreter, the operations of reading a new line, "understanding" it, and executing it are intertwined: they occur simultaneously.

Here is the idea, expanded a little more. Each time a new line of

LISP

is typed in, the interpreter tries to process it. This means that the interpreter jolts into action, and certain (machine language) instructions inside it get executed.

Precisely which ones get executed depends on the

LISP

statement itself, of course. There are many

JUMP

instructions inside the interpreter, so that the new line of

LISP

may cause control to move around in a complex way-forwards, backwards, then forwards again, etc.. Thus, each

LISP

statement gets converted into a "pathway" inside the interpreter, and the act of following that pathway achieves the desired effect.

Sometimes it is helpful to think of the

LISP

statements as mere pieces of data which are fed sequentially to a constantly running machine language program (the

LISP

interpreter). When you think of things this way, you get a different image of the relation between a program written in a higher-level language and the machine which is executing it.

Bootstrapping

Of course a compiler, being itself a program, has to be written in some language.

The first compilers were written in assembly language, rather than machine language, thus taking full advantage of the already ac-