From Eternity to Here

The process of dividing up the space of microstates of some particular physical system (gas in a box, a glass of water, the universe) into sets that we label “macroscopically indistinguishable” is known as

coarse-graining

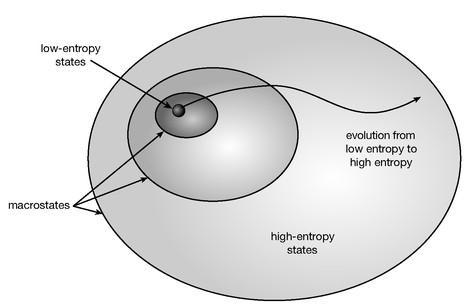

. It’s a little bit of black magic that plays a crucial role in the way we think about entropy. In Figure 45 we’ve portrayed how coarse-graining works; it simply divides up the space of all states of a system into regions (macrostates) that are indistinguishable by macroscopic observations. Every point within one of those regions corresponds to a different microstate, and the entropy associated with a given microstate is proportional to the logarithm of the area (or really volume, as it’s a very high-dimensional space) of the macrostate to which it belongs. This kind of figure makes it especially clear why entropy tends to go up: Starting from a state with low entropy, corresponding to a very tiny part of the space of states, it’s only to be expected that an ordinary system will tend to evolve to states that are located in one of the large-volume, high-entropy regions.

Figure 45 is not to scale; in a real example, the low-entropy macrostates would be much smaller compared to the high-entropy macrostates. As we saw with the divided-box example, the number of microstates corresponding to high-entropy macrostates is enormously larger than the number associated with low-entropy macrostates. Starting with low entropy, it’s certainly no surprise that a system should wander into the roomier high-entropy parts of the space of states; but starting with high entropy, a typical system can wander for a very long time without ever bumping into a low-entropy condition. That’s what equilibrium is like; it’s not that the microstate is truly static, but that it never leaves the high-entropy macrostate it’s in.
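The divided-box counting can be made concrete with a short Python sketch. As assumptions for illustration only: there are N = 100 distinguishable molecules, the macrostate is nothing but the number of molecules in the left half, and Boltzmann’s constant is set to 1, so the entropy is just S = log W, where W is the number of microstates in the macrostate. The helper names `microstates` and `entropy` are hypothetical.

```python
import math

def microstates(N: int, n_left: int) -> int:
    """Number of ways to put n_left of N distinguishable molecules in the left half."""
    return math.comb(N, n_left)

def entropy(N: int, n_left: int) -> float:
    """Boltzmann entropy in units where k_B = 1: S = log W."""
    return math.log(microstates(N, n_left))

N = 100
total = 2 ** N  # each molecule is independently on the left or the right

for n in (0, 10, 25, 50):
    W = microstates(N, n)
    print(f"n_left={n:3d}  W={W:.3e}  S={entropy(N, n):6.2f}  fraction={W / total:.3e}")

# Share of all microstates that lie in the "nearly even" macrostates, 45..55 on the left
near_even = sum(microstates(N, n) for n in range(45, 56)) / total
print(f"fraction with 45..55 molecules on the left: {near_even:.3f}")
```

The "all on the left" macrostate contains exactly one microstate (S = 0), while the handful of nearly even macrostates together hold most of the 2^100 microstates. That is the sense in which the low-entropy regions of Figure 45 should be drawn fantastically smaller.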

Figure 45:

The process of coarse-graining consists of dividing up the space of all possible microstates into regions considered to be macroscopically indistinguishable, which are called macrostates. Each macrostate has an associated entropy, proportional to the logarithm of the volume it takes up in the space of states. The size of the low-entropy regions is exaggerated for clarity; in reality, they are fantastically smaller than the high-entropy regions.

This whole business should strike you as just a little bit funny. Two microstates belong to the same macrostate when they are macroscopically indistinguishable. But that’s just a fancy way of saying, “when we can’t tell the difference between them on the basis of macroscopic observations.” It’s the appearance of “we” in that statement that should make you nervous. Why should our powers of observation be involved in any way at all? We like to think of entropy as a feature of the world, not as a feature of our ability to perceive the world. Two glasses of water are in the same macrostate if they have the same temperature throughout the glass, even if the exact distribution of positions and momenta of the water molecules is different, because we can’t directly measure all of that information. But what if we ran across a race of superobservant aliens who could peer into a glass of liquid and observe the position and momentum of every molecule? Would such a race think that there was no such thing as entropy?

There are several different answers to these questions, none of which is found satisfactory by everyone working in the field of statistical mechanics. (If any of them were, you would need only that one answer.) Let’s look at two of them.

The first answer is, it really doesn’t matter. That is, it might matter a lot to you how you bundle up microstates into macrostates for the purposes of the particular physical situation in front of you, but it ultimately doesn’t matter if all we want to do is argue for the validity of something like the Second Law. From Figure 45, it’s clear why the Second Law should hold: There is a lot more room corresponding to high-entropy states than to low-entropy ones, so if we start in the latter it is natural to wander into the former. But that will hold true no matter how we actually do the coarse-graining. The Second Law is robust; it depends on the definition of entropy as the logarithm of a volume within the space of states, but not on the precise way in which we choose that volume. Nevertheless, in practice we do make certain choices and not others, so this transparent attempt to avoid the issue is not completely satisfying.
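The claim that the Second Law does not depend on how we coarse-grain can be checked in a toy model. The Python sketch below is a stochastic stand-in, not the reversible dynamics discussed later in this chapter: 200 particles take random one-cell hops in a hypothetical one-dimensional box of 60 cells, starting bunched in the middle. The same sequence of positions is then scored with two different coarse-grainings, bins of 3 cells and bins of 15 cells. The box size, particle count, bin widths, and helper names are all assumptions chosen for illustration.

```python
import math
import random

CELLS = 60    # hypothetical one-dimensional box of 60 cells
STEPS = 2000

def coarse_entropy(positions, bin_width):
    """log of the number of particle-to-bin assignments that give these bin counts."""
    counts = {}
    for x in positions:
        b = x // bin_width
        counts[b] = counts.get(b, 0) + 1
    return math.lgamma(len(positions) + 1) - sum(math.lgamma(n + 1) for n in counts.values())

def hop(positions, rng):
    """Each particle tries to move one cell left or right; moves through a wall are refused."""
    return [min(CELLS - 1, max(0, x + rng.choice((-1, 1)))) for x in positions]

rng = random.Random(1)
positions = [rng.randint(27, 32) for _ in range(200)]  # start bunched in the middle

# Two different coarse-grainings of the same box: fine bins of 3 cells, coarse bins of 15 cells.
history = {3: [], 15: []}
for t in range(STEPS + 1):
    if t % 500 == 0:
        for width, series in history.items():
            series.append(coarse_entropy(positions, width))
    if t < STEPS:
        positions = hop(positions, rng)

for width, series in history.items():
    print(f"bins of {width:2d} cells:", "  ".join(f"{s:7.1f}" for s in series))
```

The two coarse-grainings assign different numerical entropies to the same microstate, but both rise as the particles spread out, which is the robustness the first answer relies on.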

The second answer is that the choice of how to coarse-grain is not completely arbitrary and socially constructed, even if some amount of human choice does come into the matter. The fact is, we coarse-grain in ways that seem physically natural, not just chosen at whim. For example, when we keep track of the temperature and pressure in a glass of water, what we’re really doing is throwing away all information that we could measure only by looking through a microscope. We’re looking at average properties within relatively small regions of space because that’s what our senses are actually able to do. Once we choose to do that, we are left with a fairly well-defined set of macroscopically observable quantities.

Averaging within small regions of space isn’t a procedure that we hit upon randomly, nor is it a peculiarity of our human senses as opposed to the senses of a hypothetical alien; it’s a very natural thing, given how the laws of physics work.

When I look at cups of coffee and distinguish between cases where a teaspoon of milk has just been added and ones where the milk has become thoroughly mixed, I’m not pulling a random coarse-graining of the states of the coffee out of my hat; that’s how the coffee looks to me, immediately and phenomenologically. So even though in principle our choice of how to coarse-grain microstates into macrostates seems absolutely arbitrary, in practice Nature hands us a very sensible way to do it.

RUNNING ENTROPY BACKWARD

A remarkable consequence of Boltzmann’s statistical definition of entropy is that the Second Law is not absolute—it just describes behavior that is overwhelmingly likely. If we start with a medium-entropy macrostate, almost all microstates within it will evolve toward higher entropy in the future, but a small number will actually evolve toward lower entropy.

It’s easy to construct an explicit example. Consider a box of gas, in which the gas molecules all happened to be bunched together in the middle of the box in a low-entropy configuration. If we just let it evolve, the molecules will move around, colliding with one another and with the walls of the box, and ending up (with overwhelming probability) in a much higher-entropy configuration.

Now consider a particular microstate of the above box of gas at some moment after it has become high-entropy. From there, construct a new state by keeping all of the molecules at exactly the same positions, but precisely reversing all of the velocities. The resulting state still has a high entropy—it’s contained within the same macrostate as we started with. (If someone suddenly reversed the direction of motion of every single molecule of air around you, you’d never notice; on average there are equal numbers moving in every direction.) Starting in this state, the motion of the molecules will exactly retrace the path that they took from the previous low-entropy state. To an external observer, it will look as if the entropy is spontaneously decreasing. The fraction of high-entropy states that have this peculiar property is astronomically small, but they certainly exist.

Figure 46:

On the top row, ordinary evolution of molecules in a box from a low-entropy initial state to a high-entropy final state. At the bottom, we carefully reverse the momentum of every particle in the final state from the top, to obtain a time-reversed evolution in which entropy decreases.
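The velocity-reversal construction drawn in Figure 46 can be reproduced exactly in a small Python sketch. Several simplifications are assumptions made for illustration: the particles live on an integer lattice of 100 cells (so the arithmetic is exact and no rounding error spoils the reversal), they bounce off the walls but do not collide with one another, and entropy is computed from a coarse-graining into ten equal bins of position. The helper names `step`, `evolve`, and `coarse_entropy` are hypothetical.

```python
import math
import random

L = 100       # cells 0..L-1; the walls sit just beyond cell 0 and cell L-1
N_BINS = 10   # coarse-graining: ten equal-width bins of 10 cells each

def step(x, v):
    """Move one particle one tick; bounce off a wall by mirroring position and flipping v."""
    x += v
    if x >= L:                  # passed the right wall: mirror back into the box
        x, v = 2 * L - 1 - x, -v
    elif x < 0:                 # passed the left wall
        x, v = -1 - x, -v
    return x, v

def evolve(xs, vs, ticks):
    """Evolve all particles for a number of ticks (particles do not collide with each other)."""
    for _ in range(ticks):
        moved = [step(x, v) for x, v in zip(xs, vs)]
        xs = [x for x, _ in moved]
        vs = [v for _, v in moved]
    return xs, vs

def coarse_entropy(xs):
    """log of the number of particle-to-bin assignments that give these bin counts."""
    counts = [0] * N_BINS
    for x in xs:
        counts[x * N_BINS // L] += 1
    return math.lgamma(len(xs) + 1) - sum(math.lgamma(n + 1) for n in counts)

rng = random.Random(0)
N, T = 300, 137
x0 = [rng.randint(45, 54) for _ in range(N)]                      # bunched in the middle: low entropy
v0 = [rng.choice((-1, 1)) * rng.randint(1, 9) for _ in range(N)]  # speeds below L: at most one bounce per tick

x1, v1 = evolve(x0, v0, T)                 # ordinary evolution: entropy goes up
x2, v2 = evolve(x1, [-v for v in v1], T)   # reverse every velocity and run for the same time

print("entropy:", [round(coarse_entropy(xs), 1) for xs in (x0, x1, x2)])
print("back to the start:", x2 == x0, "with reversed velocities:", v2 == [-v for v in v0])
```

The reversed run lands on exactly the original positions, with every velocity flipped, and the coarse-grained entropy falls back to its initial value. The reversed state at the start of the second run sits in the same high-entropy macrostate as the forward run's end, which is why an observer watching only macroscopic quantities would see entropy spontaneously decrease.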

We could even imagine an entire universe that was like that, if we believe that the fundamental laws are reversible. Take our universe today: It is described by some particular microstate, which we don’t know, although we know something about the macrostate to which it belongs. Now simply reverse the momentum of every single particle in the universe and, moreover, do whatever extra transformations (changing particles to antiparticles, for example) are needed to maintain the integrity of time reversal. Then let it go. What we would see would be an evolution toward the “future” in which the universe collapsed, stars and planets unformed, and entropy generally decreased all around; it would just be the history of our actual universe played backward in time.

However—the thought experiment of an entire universe with a reversed arrow of time is much less interesting than that of some subsystem of the universe with a reversed arrow. The reason is simple: Nobody would ever notice.

In Chapter One we asked what it would be like if time passed more quickly or more slowly. The crucial question there was: Compared to what? The idea that “time suddenly moves more quickly for everyone in the world” isn’t operationally meaningful; we measure time by synchronized repetition, and as long as clocks of all sorts (including biological clocks and the clocks defined by subatomic processes) remain properly synchronized, there’s no way you could tell that the “rate of time” was in any way different. It’s only if some particular clock speeds up or slows down compared to other clocks that the concept makes any sense.

Exactly the same problem is attached to the idea of “time running backward.” When we visualize time going backward, we might imagine some part of the universe running in reverse, like an ice cube spontaneously forming out of a cool glass of water. But if the whole thing ran in reverse, it would be precisely the same as it appears now. It would be no different than running the universe forward in time, but choosing some perverse time coordinate that ran in the opposite direction.

The arrow of time isn’t a consequence of the fact that “entropy increases to the future”; it’s a consequence of the fact that “entropy is very different in one direction of time than the other.” If there were some other part of the universe, which didn’t interact with us in any way, where entropy decreased toward what we now call the future, the people living in that reversed-time world wouldn’t notice anything out of the ordinary. They would experience an ordinary arrow of time and claim that entropy was lower in their past (the time of which they have memories) and grew to the future. The difference is that what they mean by “the future” is what we call “the past,” and vice versa. The direction of the time coordinate on the universe is completely arbitrary, set by convention; it has no external meaning. The convention we happen to prefer is that “time” increases in the direction that entropy increases. The important thing is that entropy increases in the same temporal direction for everyone within the observable universe, so that they can agree on the direction of the arrow of time.

Of course, everything changes if two people (or other subsets of the physical universe) who can actually communicate and interact with each other disagree on the direction of the arrow of time. Is it possible for my arrow of time to point in a different direction than yours?

THE DECONSTRUCTION OF BENJAMIN BUTTON

We opened Chapter Two with a few examples of incompatible arrows of time in literature—stories featuring some person or thing that seemed to experience time backward. The homunculus narrator of Time’s Arrow remembered the future but not the past; the White Queen experienced pain just before she pricked her finger; and the protagonist of F. Scott Fitzgerald’s “The Curious Case of Benjamin Button” grew physically younger as time passed, although his memories and experiences accumulated in the normal way. We now have the tools to explain why none of those things happen in the real world.

As long as the fundamental laws of physics are perfectly reversible, given the precise state of the entire universe (or any closed system) at any one moment in time, we can use those laws to determine what the state will be at any future time, or what it was at any past time. We usually take that time to be the “initial” time, but in principle we could choose any moment, and in the present context, when we’re worried about arrows of time pointing in different directions, there is no time that is initial for everything. So what we want to ask is: Why is it difficult, or even impossible, to choose a state of the universe with the property that, as we evolve it forward in time, some parts of it have increasing entropy and some parts have decreasing entropy?