The Cosmic Landscape (5 page)

By contrast, the quantum billiard game is unpredictable no matter how hard the players work to make it precise. No amount of precision would allow anything more than statistical predictions of outcomes. The classical billiard player might resort to statistics just because the initial data were imperfectly known or because solving the equations of motion might be too hard. But the quantum player has no choice. The laws of quantum mechanics have an intrinsically random element that can never be eliminated. Why not—why can’t we predict the future from the knowledge of the initial positions and velocities? The answer is the famous Heisenberg Uncertainty Principle.

The Uncertainty Principle describes a fundamental limitation on how well we can determine the position and velocity of an object simultaneously. It’s the ultimate catch-22. By improving our knowledge of the position of a ball in an effort to improve our predictions, we inevitably lose precision about its velocity, and therefore about where the ball will be in the next instant. The Uncertainty Principle is not just a qualitative fact about the behavior of objects. It has a very precise quantitative formulation: the product of the uncertainty of an object’s position and the uncertainty of its momentum[6] can never be smaller than a certain (very small) number set by Planck’s constant.[7]
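For readers who want the formula, the standard textbook form of this statement (Kennard's inequality) is sketched below. Here Δx and Δp are the spreads (standard deviations) of position and momentum, and ħ is the reduced Planck constant, Planck's constant h divided by 2π:

```latex
\Delta x \,\Delta p \;\ge\; \frac{\hbar}{2},
\qquad
\hbar = \frac{h}{2\pi} \approx 1.055 \times 10^{-34}\ \mathrm{J\,s}
```

Because momentum is mass times velocity, the corresponding velocity spread is Δv ≥ ħ/(2mΔx). The heavier the object, the smaller the unavoidable velocity spread, which is why the effect is negligible for a real billiard ball but dominant for an electron.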

Heisenberg and others after him tried to dream up ways to beat the Uncertainty Principle. Heisenberg’s examples involved electrons, but he might just as well have used billiard balls. Shine a beam of light on a quantum billiard ball. The light that reflects off the ball can be focused on a photographic film, and from the image, the location of the ball can be deduced. But what about the velocity of the ball: how can it be measured? The simplest and most direct way would be to make a second measurement of the position a short time later. Once the position is known at two successive instants, it’s an easy matter to determine the velocity.

Why is this type of experiment not possible? The answer goes back to one of Einstein’s greatest discoveries. Newton had believed that light consisted of particles, but by the beginning of the twentieth century, the particle theory of light had been completely discredited. Many optical effects like interference could be explained only by assuming light to be a wave phenomenon similar to ripples on the surface of water. In the mid-nineteenth century James Clerk Maxwell had produced a highly successful theory that envisioned light as electromagnetic waves that propagate through space in much the same way that sound propagates through air. Thus, it was a radical shock when, in 1905, Albert Einstein proposed that light (and all other electromagnetic radiation) is made out of little bullets called quanta, or photons.[8]

In some strange new way, Einstein was proposing that light had all the old wave properties—wavelength, frequency, etc.—but also a graininess, as if it were composed of discrete bits. These quanta are packets of energy that cannot be subdivided, and that fact creates certain limitations when one attempts to form accurate images of small objects.

Let’s begin with the determination of the position. In order to get a good sharp image of the ball, the wavelength of the light must not be too long. The rule is simple: if you want to locate an object to a given accuracy, you must use waves whose wavelengths are no larger than the allowable error. All images are fuzzy to some degree, and limiting the fuzziness means using short wavelengths. This is no problem in classical physics, where the energy of a beam of light can be arbitrarily small. But as Einstein claimed, light is made of indivisible photons. Moreover, as we will see below, the shorter the wavelength of a light ray, the larger the energy of its photons.

What all of this means is that getting a sharp image that accurately locates the ball requires you to hit it with high-energy photons. But this places severe limitations on the accuracy of subsequent velocity measurements. The trouble is that a high-energy photon will collide with the billiard ball and give it a sharp kick, thus changing the very velocity that we intended to measure. This is an example of the frustration in trying to determine both position and velocity with infinite precision.
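The trade-off can be put into rough numbers. The sketch below is an order-of-magnitude estimate, not a rigorous derivation: it assumes the image locates the ball only to within about one wavelength (Δx ≈ λ) and that a photon can transfer up to its whole momentum p = h/λ to the ball. The function name `microscope_uncertainties` is purely illustrative.

```python
# Order-of-magnitude sketch of Heisenberg's microscope argument.
# Assumptions (simplifications, not a rigorous derivation):
#   - the image locates the ball only to within about one wavelength: dx ~ wavelength
#   - the photon can transfer up to its whole momentum p = h / wavelength: dp ~ h / wavelength

PLANCK_H = 6.62607015e-34  # Planck's constant, J*s (exact SI value)


def microscope_uncertainties(wavelength_m: float) -> tuple[float, float]:
    """Return (position uncertainty, momentum kick) for light of the given wavelength."""
    dx = wavelength_m             # sharper images need shorter wavelengths...
    dp = PLANCK_H / wavelength_m  # ...but shorter wavelengths mean harder kicks
    return dx, dp


for name, wavelength in [("radio", 1.0), ("visible", 500e-9), ("gamma", 1e-12)]:
    dx, dp = microscope_uncertainties(wavelength)
    print(f"{name:8s} dx = {dx:.1e} m   dp = {dp:.1e} kg*m/s   dx*dp = {dx * dp:.3e} J*s")
```

Whatever wavelength is chosen, the product in the last column stays at about Planck's constant: improving one factor simply worsens the other.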

The connection between the wavelength of electromagnetic radiation and the energy of photons (the smaller the wavelength, the larger the energy) was one of Einstein’s most important 1905 discoveries. In order of increasing wavelength, the electromagnetic spectrum consists of gamma rays, X-rays, ultraviolet light, visible light, infrared light, microwaves, and radio waves. Radio waves have the longest wavelength, from meters to cosmic dimensions. They are a very poor choice for making precise images of ordinary objects because the images will be no sharper than the wavelength. In a radio image a human being would be indistinguishable from a sack of laundry. In fact it would be impossible to tell one person from two unless the separation between them were greater than the wavelength of the radio wave. All the images would be blurred fuzz balls. This doesn’t mean that radio waves are never useful for imaging: they are just not good for imaging small objects. Radio astronomy is a very powerful method for studying large astronomical objects. By contrast, gamma rays are best for getting information about really small things such as nuclei. They have the smallest wavelengths, a good deal smaller than the size of a single atom.

On the other hand, the energy of a single photon increases as the wavelength decreases. Individual radio photons are far too feeble to detect. Photons of visible light are more energetic: one visible photon is enough to break up a molecule. To an eye that has been accustomed to the dark, a single photon of visible-wavelength light is just barely enough to activate a retinal rod. Ultraviolet and X-ray photons have enough energy to easily kick electrons out of atoms, and gamma rays can break up not only nuclei, but even protons and neutrons.
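The energy of a single photon is E = hc/λ, where c is the speed of light. The sketch below evaluates it for one representative wavelength per band; those wavelengths are illustrative choices, since each band actually spans a wide range. Visible photons come out at a few electron volts, comparable to typical chemical bond energies, while gamma photons reach millions of electron volts.

```python
# Photon energy from wavelength: E = h * c / wavelength.
# The representative wavelength for each band is an illustrative choice;
# each band actually spans a wide range.

PLANCK_H = 6.62607015e-34       # J*s
SPEED_OF_LIGHT = 2.99792458e8   # m/s
JOULES_PER_EV = 1.602176634e-19


def photon_energy_ev(wavelength_m: float) -> float:
    """Energy of a single photon of the given wavelength, in electron volts."""
    return PLANCK_H * SPEED_OF_LIGHT / wavelength_m / JOULES_PER_EV


# Ordered from longest to shortest wavelength, so energies increase down the list.
BANDS = [
    ("radio", 1.0),
    ("microwave", 1e-2),
    ("infrared", 1e-5),
    ("visible", 500e-9),
    ("ultraviolet", 50e-9),
    ("X-ray", 1e-9),
    ("gamma", 1e-12),
]

for band, wavelength in BANDS:
    print(f"{band:12s} {wavelength:8.0e} m  ->  {photon_energy_ev(wavelength):10.3e} eV")
```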

This inverse relation between wavelength and energy explains one of the all-pervasive trends in twentieth-century physics: the quest for bigger and bigger accelerators. Physicists, trying to uncover the smallest constituents of matter (molecules, atoms, nuclei, quarks, etc.) were naturally led to ever-smaller wavelengths to get clear images of these objects. But smaller wavelengths inevitably meant higher-energy quanta. In order to create such high-energy quanta, particles had to be accelerated to enormous kinetic energies. For example, electrons can be accelerated to huge energies, but only by machines of increasing size and power. The Stanford Linear Accelerator Center (SLAC) near where I live can accelerate electrons to energies 200,000 times their mass. But this requires a machine about two miles long. SLAC is essentially a two-mile microscope that can resolve objects a thousand times smaller than a proton.
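The "two-mile microscope" claim can be checked roughly from the figure quoted above. The sketch assumes the electrons are ultra-relativistic (so momentum is about E/c), takes the smallest resolvable detail to be about the reduced de Broglie wavelength ħc/E, and uses an approximate proton charge radius of 0.84 fm as an assumed input. It is an order-of-magnitude estimate only.

```python
# Rough resolving power of an electron beam, using the figure quoted in the text
# (beam energy = 200,000 x electron rest energy).
# Assumptions: the electron is ultra-relativistic, so p ~ E / c, and the smallest
# resolvable detail is about the reduced de Broglie wavelength hbar * c / E.

HBAR_C_MEV_FM = 197.3269804         # hbar * c in MeV * femtometres
ELECTRON_REST_ENERGY_MEV = 0.51099895
PROTON_RADIUS_FM = 0.84             # approximate proton charge radius (assumed value)


def resolution_fm(beam_energy_mev: float) -> float:
    """Approximate smallest resolvable distance, in femtometres (1 fm = 1e-15 m)."""
    return HBAR_C_MEV_FM / beam_energy_mev


beam_energy = 200_000 * ELECTRON_REST_ENERGY_MEV  # about 1.0e5 MeV, i.e. ~100 GeV
res = resolution_fm(beam_energy)
ratio = 2 * PROTON_RADIUS_FM / res                # proton diameter / resolution
print(f"beam energy ~ {beam_energy / 1000:.0f} GeV")
print(f"resolution  ~ {res:.1e} fm, about {ratio:.0f} times smaller than a proton")
```

With these assumptions the ratio comes out in the high hundreds, consistent with the text's "a thousand times smaller than a proton" as an order of magnitude.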

Throughout the twentieth century many unsuspected things were discovered as physicists probed to smaller and smaller distances. One of the most dramatic was the discovery that protons and neutrons are not elementary particles at all. By hitting them with high-energy particles, it became possible to discern the tiny components, quarks, that make up the proton and neutron. But even with the highest-energy (shortest-wavelength) probes, the electron, the photon, and the quark remain, as far as we can tell, pointlike objects. This means that we have been unable to detect any structure, size, or internal parts in them. They may as well be infinitely small points of space.

Let’s return to Heisenberg’s Uncertainty Principle and its implications. Picture a single ball on the billiard table. Because the ball is confined to the table by the cushions, we automatically know something about its position in space: the uncertainty of the position is no bigger than the dimensions of the table. The smaller the table, the more accurately we know the position and, therefore, the less certain we can be of the momentum. Thus, if we were to measure the velocity of the ball confined to the table, it would be somewhat random and fluctuating. Even if we removed as much kinetic energy as possible, this residual fluctuating motion could not be eliminated. Brian Greene has used the term quantum jitters to describe this motion, and I will follow his lead.[9] The kinetic energy associated with the quantum jitters is called zero-point energy, and it cannot be eliminated.
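A simple model makes the zero-point energy concrete: treat the cushions as the rigid walls of a one-dimensional box of length L. The lowest allowed energy of a particle of mass m in such a box is h²/(8mL²), which shrinks as the box grows or the particle gets heavier but never reaches zero. The sketch compares a billiard ball with an electron confined to roughly atomic size; the mass and lengths are illustrative assumptions.

```python
# Zero-point energy of a particle confined to a one-dimensional box with rigid walls.
# The lowest allowed energy is E1 = h**2 / (8 * m * L**2): it shrinks as the box
# grows or the particle gets heavier, but it never reaches zero.

PLANCK_H = 6.62607015e-34          # J*s
JOULES_PER_EV = 1.602176634e-19
ELECTRON_MASS = 9.1093837015e-31   # kg


def zero_point_energy(mass_kg: float, box_length_m: float) -> float:
    """Ground-state kinetic energy (J) of a particle in a 1-D box of the given length."""
    return PLANCK_H**2 / (8 * mass_kg * box_length_m**2)


# Illustrative values (assumptions): a 0.17 kg ball on a 2.5 m table,
# and an electron confined to 0.1 nm, roughly the size of an atom.
ball = zero_point_energy(0.17, 2.5)
electron = zero_point_energy(ELECTRON_MASS, 1e-10)
print(f"billiard ball: {ball:.1e} J")
print(f"electron:      {electron / JOULES_PER_EV:.1f} eV")
```

For the billiard ball the zero-point energy is absurdly small, which is why nobody notices it; for the electron it is tens of electron volts, comparable to atomic energies.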

The quantum jitters implied by the Uncertainty Principle have an interesting consequence for ordinary matter as we try to cool it to zero temperature. Heat is, of course, the energy of random molecular motion. In classical physics, as a system is cooled, the molecules eventually come to rest at absolute zero temperature. The result: at absolute zero all the kinetic energy of the molecules is eliminated.

But each molecule in a solid has a fairly well-defined location. It is held in place, not by billiard table cushions, but by the other molecules. By the Uncertainty Principle, the molecules must therefore have a fluctuating velocity. In a real material subject to the laws of quantum mechanics, the molecular kinetic energy can never be totally removed, even at absolute zero!
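One standard way to see this quantitatively is to model each molecule's vibration about its site as a harmonic oscillator of frequency f. Its allowed energies are (n + ½)hf, so its thermal average energy is hf/2 + hf/(e^(hf/kT) − 1), where k is Boltzmann's constant. The classical result, kT, drops to zero at absolute zero; the quantum result bottoms out at hf/2. The frequency used below is an assumed, order-of-magnitude value.

```python
import math

PLANCK_H = 6.62607015e-34    # J*s
BOLTZMANN_K = 1.380649e-23   # J/K


def mean_oscillator_energy(freq_hz: float, temp_k: float) -> float:
    """Thermal average energy (J) of a quantum harmonic oscillator, including zero-point energy."""
    quantum = PLANCK_H * freq_hz
    zero_point = quantum / 2
    if temp_k == 0:
        return zero_point  # ground state only: the jitters remain
    x = quantum / (BOLTZMANN_K * temp_k)
    # quantum / (exp(x) - 1), rewritten so that very large x underflows to 0
    # instead of overflowing.
    return zero_point + quantum * math.exp(-x) / -math.expm1(-x)


def classical_oscillator_energy(temp_k: float) -> float:
    """Classical equipartition result for a 1-D oscillator: k*T, which vanishes at T = 0."""
    return BOLTZMANN_K * temp_k


FREQ = 1e13  # assumed vibration frequency, Hz
for T in [0, 10, 100, 1000]:
    print(f"T = {T:5d} K  quantum = {mean_oscillator_energy(FREQ, T):.3e} J"
          f"  classical = {classical_oscillator_energy(T):.3e} J")
```

At high temperature the two results agree; as the temperature falls, the classical energy goes to zero while the quantum one levels off at the zero-point value.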

Position and velocity are by no means unique in having an Uncertainty Principle. There are many pairs of so-called conjugate quantities that cannot be determined simultaneously: the better one is fixed, the more the other fluctuates. A very important example is the energy-time uncertainty principle: it is impossible to determine both the exact time at which an event takes place and the exact energy of the objects involved. Suppose an experimental physicist wished to collide two particles at a particular instant of time. The energy-time uncertainty principle limits the precision with which she can control the energy of the particles and also the time at which they hit each other. Controlling the energy with increasing precision inevitably leads to increasing randomness in the time of collision, and vice versa.
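As a rough illustration, the sketch below uses the common heuristic form ΔE·Δt ≥ ħ/2 to compute the smallest energy spread compatible with pinning a collision down to a given time window. The time windows are arbitrary examples.

```python
HBAR = 1.054571817e-34           # reduced Planck constant, J*s
JOULES_PER_EV = 1.602176634e-19


def min_energy_spread_ev(time_window_s: float) -> float:
    """Smallest energy spread (eV) compatible with pinning an event down to time_window_s,
    using the heuristic form dE * dt >= hbar / 2."""
    return HBAR / (2 * time_window_s) / JOULES_PER_EV


for dt in [1e-9, 1e-15, 1e-21]:
    print(f"dt = {dt:.0e} s  ->  dE >= {min_energy_spread_ev(dt):.2e} eV")
```

Shrinking the time window by a factor of a million raises the minimum energy spread by the same factor.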

Another important example that will come up in chapter 2 involves the electric and magnetic fields at a point of space. These fields, which will play a key role in subsequent chapters, are invisible influences that fill space and control the forces on electrically charged particles. Electric and magnetic fields, like position and velocity, cannot be simultaneously determined. If one is known, the other is necessarily uncertain. For this reason the fields are in a constant state of jittering fluctuation that cannot be eliminated. And, as you might expect, this leads to a certain amount of energy, even in absolutely empty space. This vacuum energy has led to one of the greatest paradoxes of modern physics and cosmology. We will come back to it many times, beginning with the next chapter.

Uncertainty and jitters are not the whole story. Quantum mechanics has another side to it: the quantum side. The word quantum implies a certain degree of discreteness or graininess in nature. Photons, the units of energy that make up light waves, are only one example of quanta. Electromagnetic radiation is an oscillatory phenomenon; in other words, it is a vibration. A child on a swing, a vibrating spring, a plucked violin string, a sound wave: all are also oscillatory phenomena, and they all share the property of discreteness. In each case the energy comes in discrete quantum units that can’t be subdivided. In the macroscopic world of springs and swings, the quantum unit of energy is so small that it seems to us that the energy can be anything. But, in fact, the energy of an oscillation comes in indivisible units equal to the frequency of the oscillation (number of oscillations per second) times Planck’s very small constant.
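The rule in the last sentence is E = hf. The sketch below evaluates it for a few oscillators; the frequencies are assumed, order-of-magnitude values, and the 100 J swing energy is likewise just an illustrative figure.

```python
PLANCK_H = 6.62607015e-34  # J*s


def energy_quantum(freq_hz: float) -> float:
    """Indivisible unit of energy (J) for an oscillation of the given frequency: E = h * f."""
    return PLANCK_H * freq_hz


# Illustrative frequencies (assumed, order-of-magnitude values).
oscillators = {
    "child on a swing": 0.5,
    "violin A string": 440.0,
    "green light": 5.6e14,
}
for name, freq in oscillators.items():
    print(f"{name:17s} f = {freq:8.1e} Hz  quantum = {energy_quantum(freq):.2e} J")

# A swing carrying, say, 100 J of energy holds an enormous number of quanta,
# which is why its energy looks continuous.
print(f"quanta in a 100 J swing: {100 / energy_quantum(0.5):.1e}")
```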

The electrons in an atom, as they sweep around the nucleus, also oscillate. In this case the quantization of energy is described by imagining discrete orbits. Niels Bohr, the father of the quantized atom, imagined the electrons orbiting as if they were constrained to move in separate lanes on a running track. The energy of an electron is determined by which lane it occupies.
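For hydrogen, Bohr's model gives the energy of lane n as −13.6 eV/n², and an electron that drops from one lane to a lower one emits a photon carrying the energy difference. The sketch below is a minimal illustration of that model only; modern quantum mechanics replaces the orbits with wave patterns but keeps the same discrete energies for hydrogen. The function names are hypothetical.

```python
RYDBERG_EV = 13.605693  # hydrogen ground-state binding energy in the Bohr model, eV
HC_EV_NM = 1239.84198   # h * c in eV * nm


def bohr_level_ev(n: int) -> float:
    """Energy of 'lane' n (n = 1, 2, 3, ...) for hydrogen's electron in the Bohr model."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return -RYDBERG_EV / n**2


def emitted_wavelength_nm(n_upper: int, n_lower: int) -> float:
    """Wavelength of the photon emitted when the electron drops from n_upper to n_lower."""
    delta_e = bohr_level_ev(n_upper) - bohr_level_ev(n_lower)
    return HC_EV_NM / delta_e


for n in range(1, 5):
    print(f"lane {n}: {bohr_level_ev(n):7.3f} eV")
print(f"3 -> 2 jump emits light at {emitted_wavelength_nm(3, 2):.0f} nm (red)")
```

Because only whole-number lanes exist, only certain photon energies can be emitted, which is why hydrogen glows at sharp, specific colors.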

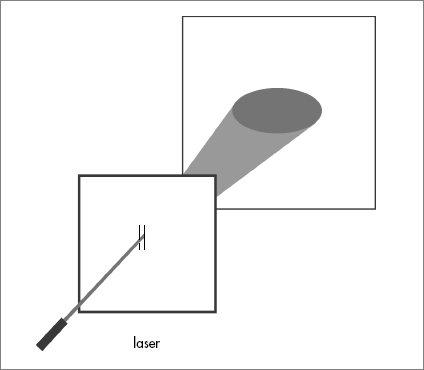

Jittery behavior and discreteness are weird enough, but the real weirdness of the quantum world involves “interference.” The famous “two-slit experiment” illustrates this remarkable phenomenon. Imagine a tiny source of light—a very intense miniature lightbulb—in an otherwise dark room. A laser beam will also do. At some distance a photographic film has been placed. When light from the source falls on the film, it blackens it in the same way that an ordinary photographic “negative” is produced. Obviously if an opaque obstacle such as a sheet of metal is placed between the source and the film, the film will be protected and will be unblackened. But now cut two parallel vertical slits in the sheet metal so that light can pass through them and affect the film. Our first experiment is very simple: block one slit—say, the left one—and turn on the source.

After a suitable time a broad band of blackened film will appear: a fuzzy image of the right slit. Next, let’s close the right slit and open the left one. A second broad band, partially overlapping the first, will appear.

Now start over with fresh, unexposed film, but this time open both slits. If you don’t know in advance what to expect, the result may amaze you. The pattern is not just the sum of the previous two blackened regions. Instead, we find a series of narrow dark and light bands, like the stripes of a zebra, replacing the two fuzzier bands. There are unblackened bands where previously the original dark bands overlapped. Somehow the light going through the left and right slits cancels in these places. The technical term is destructive interference, and it is a well-known property of waves. Another example of it is the “beats” that you hear when two almost identical notes are played.
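The difference between the one-slit-at-a-time result and the both-slits result can be reproduced in a simplified wave model: with both slits open, the wave amplitudes from the two slits are added first and then squared, whereas the earlier exposures simply add intensities. The sketch assumes each slit acts as a point source of equal strength and ignores the single-slit envelope and the fall-off with distance; the wavelength, slit separation, and screen distance are illustrative values.

```python
import cmath
import math

# Simplified two-slit model (assumptions): each slit is a point source of equal
# strength; the single-slit envelope and the fall-off with distance are ignored.
WAVELENGTH = 500e-9      # m
SLIT_SEPARATION = 50e-6  # m
SCREEN_DISTANCE = 1.0    # m


def amplitude(slit_y: float, screen_y: float) -> complex:
    """Complex wave amplitude arriving at screen_y from a slit at height slit_y."""
    path = math.hypot(SCREEN_DISTANCE, screen_y - slit_y)
    return cmath.exp(2j * math.pi * path / WAVELENGTH)


def intensities(screen_y: float) -> tuple[float, float]:
    """Return (sum of one-slit-at-a-time intensities, intensity with both slits open)."""
    a_left = amplitude(-SLIT_SEPARATION / 2, screen_y)
    a_right = amplitude(+SLIT_SEPARATION / 2, screen_y)
    separately = abs(a_left) ** 2 + abs(a_right) ** 2  # always 2: no stripes
    together = abs(a_left + a_right) ** 2              # between 0 and 4: stripes
    return separately, together


# Fringe spacing is roughly WAVELENGTH * SCREEN_DISTANCE / SLIT_SEPARATION = 1 cm here.
for y_mm in [0.0, 2.5, 5.0, 7.5, 10.0]:
    sep, both = intensities(y_mm / 1000)
    print(f"y = {y_mm:4.1f} mm  one slit at a time: {sep:.2f}  both slits: {both:.2f}")
```

Near y = 5 mm the two paths differ by half a wavelength, so the amplitudes cancel and the both-slits intensity drops to nearly zero: a dark stripe exactly where each slit alone would have exposed the film.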