Oracle Essentials Oracle Database 11g (10 page)

Read Oracle Essentials Oracle Database 11g Online

Authors: Rick Greenwald

The database block size in bytes (e.g., 4 KB = 4,096)

Databases and Instances

|

37

PROCESSES

The maximum number of concurrent database operating system processes

SESSIONS

The maximum number of database sessions

OPEN_CURSORS

The maximum number of database open cursors

SHARED_SERVERS

The minimum number of database shared servers

REMOTE_LISTENER

The remote listener name

COMPATIBLE

The database version youwant for compatibility where features affect file format (e.g., 11.1.0, 10.0.0)

MEMORY_TARGET

The target memory size that is automatically allocated to SGA and instance PGA components

DDL_LOCK_TIMEOUT

For data definition language (DDL) statements, the time a DDL statement waits for an exclusive lock (in seconds) before failing

NLS_LANGUAGE

The National Language Support (NLS) language specified for the database

NLS_TERRITORY

The National Language Support territory specified for the database As an example in the shift toward automation, in Oracle Database 11

g

, the UNDO_

MANAGEMENT parameter default is now set to automatic undo management.

Undo is used in the rollback of transactions, and for database recovery, read consistency, and flashback features. (Redo records, though, reside in the physical redo logs; they store changes to data segments and undo segment data blocks, and they hold a transaction table of the undo segments.) The undo retention period is now self-tuned by Oracle based on how the undo tablespace is configured.

For your database release, check the documentation regarding optional initialization parameters as these change from release to release. Some of them are described in the following sections.

Deploying Physical Components

This section is not a substitute for Oracle’s installation procedures, but it should provide you with some practical guidance as you plan deployment of an Oracle database.

38

|

Chapter 2: Oracle Architecture

Control Files

A database should have at least two control files on different physical disks. Without a current copy of the control file, you run the risk of losing track of portions of your database. Losing control files is not necessarily fatal—there are ways to rebuild them.

However, rebuilding control files can be difficult, introduces risk, and can be easily avoided.

The location of the control files is defined, as previously mentioned, by the CONTROL_FILES initialization parameter. Youcan specify multiple copies of control files by indicating multiple locations in the CONTROL_FILES parameter for the instance, as illustrated here:

control_files = (/u00/oradata/control.001.dbf,

/u01/oradata/control.002.dbf,

/u02/oradata/control.003.dbf)

This parameter tells the instance where to find the control files. Oracle will ensure that all copies of the control file are kept in sync so all updates to the control files will occur at the same time. If you do not specify this parameter, Oracle will create a control file using a default filename or by leveraging Oracle Managed Files (if enabled).

Many Oracle databases are deployed on some type of redundant disk solution such as RAID-1 or RAID-5 to avoid data loss when a disk fails. (RAID is covered in more detail in

Chapter 7.)

Youmight conclude that storing the control file on protected disk storage eliminates the need for maintaining multiple copies of control files and that losing a disk won’t mean loss of the control file. But there are two reasons why this is not an appropriate conclusion:

1. If youlose more than one disk in a

striped array

or

mirror-pair

, youwill lose the data on those disks. This type of event is statistically rare, but if it does occur, you could be faced with a damaged or lost control file. As you would have your hands full recovering from the multiple disk failures, you would likely prefer to avoid rebuilding control files during the recovery process. Multiplexing your control files, even when each copy is on redundant disk storage, provides an additional level of physical security.

2. Redundant disk storage does nothing to protect you from the perpetual threat of human error. Someone could inadvertently delete or rename a control file, copy another file over it, or move it. A mirrored disk will faithfully mirror these actions, and multiplexed control files will leave you with one or more surviving copies of the control file when one of the copies is damaged or lost.

Youdo not need to be concerned with additional performance impact when writing to multiple control files. Updates to the control files are insignificant compared to other disk I/O that occurs in an Oracle environment.

Deploying Physical Components

|

39

Datafiles

Datafiles contain the actual data stored in the database, the tables and indexes that store data, the data dictionary that maintains information about these data structures, and the rollback segments used to implement concurrency.

A datafile is composed of Oracle database blocks that, in turn, are composed of operating system blocks on a disk. Oracle block sizes range from 2 KB to 32 KB. Prior to Oracle9

i

, only a single block size could be present in the entire database. In versions of the database since the introduction of Oracle9

i

, youstill set a default block size for the database, but you can also have up to five other block sizes in a database (though only a single block size for each tablespace).

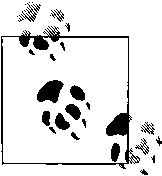

Figure 2-4

illustrates the relationship of Oracle blocks to operating system blocks.

data_01.dbf

Oracle

Blocks

Operating System Blocks

Datafile

data_01.dbf

consists of Oracle blocks.

Each Oracle block consists of four operating system blocks.

Figure 2-4. Oracle blocks and operating system blocks

Datafiles belong to only one database and to only one tablespace within that database. Data is read in units of Oracle blocks from the datafiles into memory as needed, based on the work users are doing. Blocks of data are written from memory to the datafiles stored on disk as needed to ensure that the database reliably records changes made by users.

Datafiles are the lowest level of granularity between an Oracle database and the operating system. When you lay a database out on the I/O subsystem, the smallest piece you place in any location is a datafile. Tuning the I/O subsystem to improve Oracle performance typically involves moving datafiles from one set of disks to another.

Automatic Storage Management, included in Oracle databases since Oracle Database 10

g

, provides automatic striping and eliminates manual effort in this tuning task.

40

|

Chapter 2: Oracle Architecture

Setting the Database Block Size

Prior to Oracle9

i

, youset the database block size for an Oracle database at the time you created the database, and you couldn’t change it without re-creating the database.

Since Oracle9

i

, youhave more flexibility, since youcan have multiple block sizes in the same database. In all versions, the default block size for the database is set using the DB_BLOCK_SIZE instance initialization parameter.

How do youchoose an appropriate block size for an Oracle database? Oracle defaults to a block size based on the operating system used, but understanding the implications of the block size can help youdetermine a more appropriate setting for your workload.

The block size is the minimum amount of data that can be read or written at one time.

In online transaction processing (OLTP) systems, a transaction typically involves a relatively small, well-defined set of rows, such as the rows used for placing an order for a set of products for a specific customer. The access to rows in these types of operations tends to be through indexes, as opposed to through a scan of the entire table. Because of this, having smaller blocks (4 KB) might be appropriate. Oracle won’t waste system resources by transferring larger blocks that contain additional data not required by the transaction.

Data warehouses workloads can include reading millions of rows and scans of all the data in a table. For this type of activity, using bigger database blocks enables each block read to deliver more data to the requesting user. To support these types of operations best, data warehouses usually have larger blocks, such as 8 KB or 16 KB. Each I/O

operation might take a little longer due to the larger block size, but the reduced number of operations will end up improving overall performance.

Datafile structure

The first block of each datafile is called the

datafile header

. It contains critical information used to maintain the overall integrity of the database. One of the most critical pieces of information in this header is the

checkpoint structure

. This is a logical timestamp that indicates the last point at which changes were written to the datafile. This timestamp is critical during an Oracle recovery process as the timestamp in the header determines which redo logs to apply in bringing the datafile to the current point in time.

Extents and segments

From a physical point of view, a datafile is stored as operating system blocks. From a logical point of view, datafiles have three intermediate organizational levels: data blocks, extents, and segments. An

extent

is a set of data blocks that are contiguous within an Oracle datafile. A

segment

is an object that takes up space in an Oracle database, such as a table or an index that is composed of one or more extents.

Deploying Physical Components

|

41

When Oracle updates data, it first attempts to update the data in the same data block. If there is not enough room in the data block for the new information, Oracle will write the data to a new data block that could be in a different extent.

For more information on segments and extents and how they affect performance, refer to the section on

“Fragmentation and Reorganization” in Chapter 5.

This discussion is especially important if you are running an older release of Oracle. Oracle Database 10

g

added a Segment Advisor that greatly simplifies reclaiming unused space in current database versions.

Redo Log Files

Redo log files contain a “recording” of the changes made to the database as a result of transactions and internal Oracle activities. Since Oracle usually caches changed blocks in memory, when instance failure occurs, some changed blocks might not have been written out to the datafiles. The recording of the changes in the redo logs can be used to play back the changes lost when the failure occurred, thus protecting transactional consistency.

These files are sometimes confused with rollback buffers supporting

concurrency and described in Chapter 8. They are not the same!

In addition, redo log files are used for “undo” operations when a ROLLBACK statement is issued. Uncommitted changes to the database are rolled back to the database image at the last commit.

Suppressing Redo Logging

By default, Oracle logs all changes made to the database. The generation of redo logs adds a certain amount of overhead. You can suppress redo log generation to speed up specific operations, but doing so means the operation in question won’t be logged in the redo logs and youwill not be able to recover that operation in the event of a failure.

If you do decide to suppress redo logging for certain operations, you would include the NOLOGGING keyword in the SQL statement for the operation. (Note that prior to Oracle8, the keyword was UNRECOVERABLE.) If a failure occurred during the operation, youwould need to repeat the operation. For example, youmight build an index on a table without generating redo information. In the event that a database failure occurs and the database is recovered, the index will not be re-created because it wasn’t logged. You’d simply execute the script originally intended to create the index again.

42

|

Chapter 2: Oracle Architecture

To simplify operations in the event of a failure, we recommend that you always take a backup after an unlogged operation if you cannot afford to lose the object created by the operation or youcannot repeat the operation for some reason. In addition to using the NOLOGGING keyword in certain commands, you can also mark a table or an entire tablespace with the NOLOGGING attribute. This will suppress redo information for all applicable operations on the table or for all tables in the tablespace.

Multiplexing redo log files

Oracle defines specific terminology to describe how it manages redo logs. Each Oracle instance uses a

thread

of redo to record the changes it makes to the database. A thread of redo is composed of redo log groups, which are composed of one or more redo log members.