Superintelligence: Paths, Dangers, Strategies

Authors: Nick Bostrom

Cognitive enhancement via improvements in public health and diet has steeply diminishing returns.[3] Big gains come from eliminating severe nutritional deficiencies, and the most severe deficiencies have already been largely eliminated in all but the poorest countries. Only girth is gained by increasing an already adequate diet. Education, too, is now probably subject to diminishing returns. The fraction of talented individuals in the world who lack access to quality education is still substantial, but declining.

Pharmacological enhancers might deliver some cognitive gains over the coming decades. But after the easiest fixes have been accomplished—perhaps sustainable increases in mental energy and ability to concentrate, along with better control over the rate of long-term memory consolidation—subsequent gains will be increasingly hard to come by. Unlike diet and public health approaches, however, improving cognition through smart drugs might get easier before it gets harder. The field of neuropharmacology still lacks much of the basic knowledge that would be needed to competently intervene in the healthy brain. Neglect of enhancement medicine as a legitimate area of research may be partially to blame for this current backwardness. If neuroscience and pharmacology continue to progress for a while longer without focusing on cognitive enhancement, then maybe there would be some relatively easy gains to be had when at last the development of nootropics becomes a serious priority.[4]

Genetic cognitive enhancement has a U-shaped recalcitrance profile similar to that of nootropics, but with larger potential gains. Recalcitrance starts out high while the only available method is selective breeding sustained over many generations, something that is obviously difficult to accomplish on a globally significant scale. Genetic enhancement will get easier as technology is developed for cheap and effective genetic testing and selection (and particularly when iterated embryo selection becomes feasible in humans). These new techniques will make it possible to tap the pool of existing human genetic variation for intelligence-enhancing alleles. As the best existing alleles get incorporated into genetic enhancement packages, however, further gains will get harder to come by. The need for more innovative approaches to genetic modification may then increase recalcitrance. There are limits to how quickly things can progress along the genetic enhancement path, most notably the fact that germline interventions are subject to an inevitable maturational lag: this strongly counteracts the possibility of a fast or moderate takeoff.[5] That embryo selection can only be applied in the context of in vitro fertilization will slow its rate of adoption: another limiting factor.

The recalcitrance along the brain–computer path seems initially very high. In the unlikely event that it somehow becomes easy to insert brain implants and to achieve high-level functional integration with the cortex, recalcitrance might plummet. In the long run, the difficulty of making progress along this path would be similar to that involved in improving emulations or AIs, since the bulk of the brain–computer system’s intelligence would eventually reside in the computer part.

The recalcitrance for making networks and organizations in general more efficient is high. A vast amount of effort is going into overcoming this recalcitrance, and the result is an annual improvement of humanity's total capacity by perhaps no more than a couple of percent.[6] Furthermore, shifts in the internal and external environment mean that organizations, even if efficient at one time, soon become ill-adapted to their new circumstances. Ongoing reform effort is thus required even just to prevent deterioration. A step change in the rate of gain in average organizational efficiency is perhaps conceivable, but it is hard to see how even the most radical scenario of this kind could produce anything faster than a slow takeoff, since organizations operated by humans are confined to work on human timescales. The Internet continues to be an exciting frontier with many opportunities for enhancing collective intelligence, with a recalcitrance that seems at the moment to be in the moderate range—progress is somewhat swift but a lot of effort is going into making this progress happen. This recalcitrance may be expected to increase as low-hanging fruit (such as search engines and email) is depleted.
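To see why an annual improvement of "no more than a couple of percent" keeps this path slow, take 2% per year as a representative figure. This is an illustrative assumption, not a number from the text. The calculation below gives how long humanity's total capacity would take to double at that steady compound rate:

```latex
% Doubling time T at a constant annual growth rate g (illustrative assumption: g = 0.02)
(1 + g)^{T} = 2
\quad\Longrightarrow\quad
T = \frac{\ln 2}{\ln(1 + g)} = \frac{\ln 2}{\ln 1.02} \approx \frac{0.6931}{0.0198} \approx 35 \text{ years}
```

Doubling the assumed rate to 4% per year shortens T only to about 18 years. Improvement at these rates therefore stays on a timescale of decades.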

The recalcitrance of the path toward whole brain emulation is difficult to estimate. Yet we can point to a specific future milestone: the successful emulation of an insect brain. That milestone stands on a hill, and its conquest would bring into view much of the terrain ahead, allowing us to make a decent guess at the recalcitrance of scaling up the technology to human whole brain emulation. (A successful emulation of a small-mammal brain, such as that of a mouse, would give an even better vantage point that would allow the distance remaining to a human whole brain emulation to be estimated with a high degree of precision.) The path toward artificial intelligence, by contrast, may feature no such obvious milestone or early observation point. It is entirely possible that the quest for artificial intelligence will appear to be lost in dense jungle until an unexpected breakthrough reveals the finishing line in a clearing just a few short steps away.

Recall the distinction between these two questions: How hard is it to attain roughly human levels of cognitive ability? And how hard is it to get from there to superhuman levels? The first question is mainly relevant for predicting how long it will be before the onset of a takeoff. It is the second question that is key to assessing the shape of the takeoff, which is our aim here. And though it might be tempting to suppose that the step from human level to superhuman level must be the harder one—this step, after all, takes place "at a higher altitude" where capacity must be superadded to an already quite capable system—this would be a very unsafe assumption. It is quite possible that recalcitrance falls when a machine reaches human parity.

Consider first whole brain emulation. The difficulties involved in creating the first human emulation are of a quite different kind from those involved in enhancing an existing emulation. Creating a first emulation involves huge technological challenges, particularly in regard to developing the requisite scanning and image interpretation capabilities. This step might also require considerable amounts of physical capital—an industrial-scale machine park with hundreds of high-throughput scanning machines is not implausible. By contrast, enhancing the quality of an existing emulation involves tweaking algorithms and data structures: essentially a software problem, and one that could turn out to be much easier than perfecting the imaging technology needed to create the original template. Programmers could easily experiment with tricks like increasing the neuron count in different cortical areas to see how it affects performance.[7] They also could work on code optimization and on finding simpler computational models that preserve the essential functionality of individual neurons or small networks of neurons. If the last technological prerequisite to fall into place is either scanning or translation, with computing power being relatively abundant, then not much attention might have been given during the development phase to implementational efficiency, and easy opportunities for computational efficiency savings might be available. (More fundamental architectural reorganization might also be possible, but that takes us off the emulation path and into AI territory.)

Another way to improve the code base once the first emulation has been produced is to scan additional brains with different or superior skills and talents. Productivity growth would also occur as a consequence of adapting organizational structures and workflows to the unique attributes of digital minds. Since there is no precedent in the human economy of a worker who can be literally copied, reset, run at different speeds, and so forth, managers of the first emulation cohort would find plenty of room for innovation in managerial practices.

After initially plummeting when human whole brain emulation becomes possible, recalcitrance may rise again. Sooner or later, the most glaring implementational inefficiencies will have been optimized away, the most promising algorithmic variations will have been tested, and the easiest opportunities for organizational innovation will have been exploited. The template library will have expanded so that acquiring more brain scans would add little benefit over working with existing templates. Since a template can be multiplied, each copy can be individually trained in a different field, and this can be done at electronic speed, it might be that the number of brains that would need to be scanned in order to capture most of the potential economic gains is small. Possibly a single brain would suffice.

Another potential cause of escalating recalcitrance is the possibility that emulations or their biological supporters will organize to support regulations restricting the use of emulation workers, limiting emulation copying, prohibiting certain kinds of experimentation with digital minds, instituting workers’ rights and a minimum wage for emulations, and so forth. It is equally possible, however, that political developments would go in the opposite direction, contributing to a fall in recalcitrance. This might happen if initial restraint in the use of emulation labor gives way to unfettered exploitation as competition heats up and the economic and strategic costs of occupying the moral high ground become clear.

As for artificial intelligence (non-emulation machine intelligence), the difficulty of lifting a system from human-level to superhuman intelligence by means of algorithmic improvements depends on the attributes of the particular system. Different architectures might have very different recalcitrance.

In some situations, recalcitrance could be extremely low. For example, if human-level AI is delayed because one key insight long eludes programmers, then when the final breakthrough occurs, the AI might leapfrog from below to radically above human level without even touching the intermediary rungs. Another situation in which recalcitrance could turn out to be extremely low is that of an AI system that can achieve intelligent capability via two different modes of processing. To illustrate this possibility, suppose an AI is composed of two subsystems, one possessing domain-specific problem-solving techniques, the other possessing general-purpose reasoning ability. It could then be the case that while the second subsystem remains below a certain capacity threshold, it contributes nothing to the system's overall performance, because the solutions it generates are always inferior to those generated by the domain-specific subsystem. Suppose now that a small amount of optimization power is applied to the general-purpose subsystem and that this produces a brisk rise in the capacity of that subsystem. At first, we observe no increase in the overall system's performance, indicating that recalcitrance is high. Then, once the capacity of the general-purpose subsystem crosses the threshold where its solutions start to beat those of the domain-specific subsystem, the overall system's performance suddenly begins to improve at the same brisk pace as the general-purpose subsystem, even as the amount of optimization power applied stays constant: the system's recalcitrance has plummeted.
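The two-subsystem scenario can be made concrete with a toy simulation. This is a minimal sketch, and its assumptions are ours rather than the text's:

- The system's overall performance is simply the better of the two subsystems' outputs.
- The domain-specific subsystem stays fixed at a hypothetical level of 10.
- The general-purpose subsystem gains one unit of capacity per step under constant optimization power.
- Observed recalcitrance is measured as optimization power divided by the resulting gain in overall performance.

All constant and function names are hypothetical.

```python
# Toy model of the two-subsystem example. All names and values are
# hypothetical illustrations, not figures from the text.

DOMAIN_SPECIFIC_CAPACITY = 10.0  # fixed performance of the specialist subsystem
GENERAL_START = 2.0              # starting capacity of the general-purpose subsystem
GENERAL_GAIN_PER_STEP = 1.0      # capacity gained per step under constant effort
OPTIMIZATION_POWER = 1.0         # optimization power applied each step (constant)


def overall_performance(general_capacity: float) -> float:
    """The system uses whichever subsystem produces the better solution."""
    return max(DOMAIN_SPECIFIC_CAPACITY, general_capacity)


def simulate(steps: int) -> list[tuple[int, float, float]]:
    """Return (step, overall performance, observed recalcitrance) for each step.

    Observed recalcitrance = optimization power / gain in overall performance.
    It is reported as infinite while the overall gain is zero.
    """
    rows = []
    general = GENERAL_START
    previous = overall_performance(general)
    for step in range(1, steps + 1):
        # Constant optimization power raises the general subsystem steadily...
        general += GENERAL_GAIN_PER_STEP
        current = overall_performance(general)
        # ...but overall performance only moves once the general subsystem
        # overtakes the domain-specific one.
        gain = current - previous
        recalcitrance = OPTIMIZATION_POWER / gain if gain > 0 else float("inf")
        rows.append((step, current, recalcitrance))
        previous = current
    return rows


if __name__ == "__main__":
    for step, perf, rec in simulate(12):
        print(f"step {step:2d}: performance {perf:5.1f}, recalcitrance {rec}")
```

Running it reports infinite observed recalcitrance for steps 1 through 8, while the general-purpose subsystem is still at or below the specialist's level. From step 9 onward it reports a recalcitrance of 1.0, even though the optimization power applied never changes.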

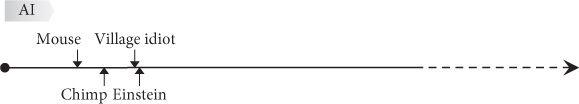

It is also possible that our natural tendency to view intelligence from an anthropocentric perspective will lead us to underestimate improvements in sub-human systems, and thus to overestimate recalcitrance. Eliezer Yudkowsky, an AI theorist who has written extensively on the future of machine intelligence, puts the point as follows:

AI might make an apparently sharp jump in intelligence purely as the result of anthropomorphism, the human tendency to think of "village idiot" and "Einstein" as the extreme ends of the intelligence scale, instead of nearly indistinguishable points on the scale of minds-in-general. Everything dumber than a dumb human may appear to us as simply "dumb". One imagines the "AI arrow" creeping steadily up the scale of intelligence, moving past mice and chimpanzees, with AIs still remaining "dumb" because AIs cannot speak fluent language or write science papers, and then the AI arrow crosses the tiny gap from infra-idiot to ultra-Einstein in the course of one month or some similarly short period.[8]

(See Fig. 8.)

The upshot of these several considerations is that it is difficult to predict how hard it will be to make algorithmic improvements in the first AI that reaches a roughly human level of general intelligence. There are at least some possible circumstances in which algorithm-recalcitrance is low. But even if algorithm-recalcitrance is very high, this would not preclude the overall recalcitrance of the AI in question from being low. For it might be easy to increase the intelligence of the system in other ways than by improving its algorithms. There are two other factors that can be improved: content and hardware.
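The point that high algorithm-recalcitrance need not make overall recalcitrance high can be written out under a simplifying assumption of ours. Treat the rate of intelligence gain from each improvable factor i (algorithms, content, hardware) as the optimization power O_i devoted to it divided by that factor's recalcitrance R_i, and let these gains simply add. With total optimization power O as the sum of the O_i:

```latex
% Assumed additive model: rate of gain = sum over factors of O_i / R_i
\frac{dI}{dt} = \sum_{i \in \{\text{alg},\,\text{content},\,\text{hw}\}} \frac{O_i}{R_i}
\;\le\; \frac{\sum_i O_i}{\min_i R_i} = \frac{O}{R_{\min}},
\qquad
R_{\text{eff}} = \min\left(R_{\text{alg}},\, R_{\text{content}},\, R_{\text{hw}}\right)
```

Equality holds when all optimization power goes to the factor with the lowest recalcitrance. Under this model, a very large R_alg does not matter as long as R_content or R_hw is small.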

First, consider content improvements. By "content" we here mean those parts of a system's software assets that do not make up its core algorithmic architecture. Content might include, for example, databases of stored percepts, specialized skills libraries, and inventories of declarative knowledge. For many kinds of system, the distinction between algorithmic architecture and content is very unsharp; nevertheless, it will serve as a rough-and-ready way of pointing to one potentially important source of capability gains in a machine intelligence. An alternative way of expressing much the same idea is by saying that a system's intellectual problem-solving capacity can be enhanced not only by making the system cleverer but also by expanding what the system knows.