Superintelligence: Paths, Dangers, Strategies (17 page)

Authors: Nick Bostrom

Solving this simple differential equation yields the exponential function

But recalcitrance being constant is a rather special case. Recalcitrance might well decline around the human baseline, due to one or more of the factors mentioned in the previous subsection, and remain low around the crossover and some distance beyond (perhaps until the system eventually approaches fundamental physical limits). For example, suppose that the optimization power applied to the system is roughly constant (i.e. approximately equal to some constant c) prior to the system becoming capable of contributing substantially to its own design, and that this leads to the system doubling in capacity every 18 months. (This would be roughly in line with historical improvement rates from Moore’s law combined with software advances.[23]) This rate of improvement, if achieved by means of roughly constant optimization power, entails recalcitrance declining as the inverse of the system power:

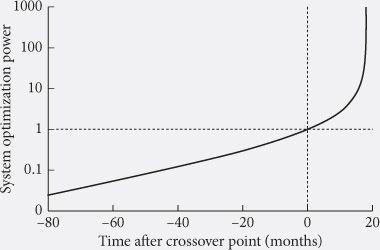

If recalcitrance continues to fall along this hyperbolic pattern, then when the AI reaches the crossover point the total amount of optimization power applied to improving the AI has doubled. We then have

The next doubling occurs 7.5 months later. Within 17.9 months, the system’s capacity has grown a thousandfold, thus obtaining speed superintelligence (Figure 9).

This particular growth trajectory has a positive singularity at t = 18 months. In reality, the assumption that recalcitrance keeps declining along this hyperbolic pattern would cease to hold as the system began to approach the physical limits to information processing, if not sooner.
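The figures quoted for this scenario can be checked with a short calculation. The sketch below is a reconstruction under stated assumptions, not the author's own formulation: it assumes that the rate of change of capacity I equals optimization power divided by recalcitrance, that recalcitrance is 1/I, that the external optimization power is a constant c fixed by the 18-month doubling time (c = ln 2 / 18 per month), and that at the crossover point the system's own contribution equals c, so that I = c at t = 0. Under those assumptions dI/dt = (c + I)·I, and the time to reach m times the crossover capacity is 18 · log2(2m / (m + 1)) months. The function names are illustrative.

```python
import math

DOUBLING_TIME_MONTHS = 18.0  # pre-crossover doubling time quoted in the text


def months_to_multiple(multiple: float,
                       doubling_time: float = DOUBLING_TIME_MONTHS) -> float:
    """Months after crossover until capacity is `multiple` times its crossover value.

    Assumed model (a reconstruction): dI/dt = (c + I) * I with I(0) = c,
    where c = ln(2) / doubling_time. Separating variables gives
    t(m) = doubling_time * log2(2m / (m + 1)) for I = m * c.
    """
    if multiple < 1:
        raise ValueError("multiple must be >= 1 (crossover capacity is the baseline)")
    return doubling_time * math.log2(2 * multiple / (multiple + 1))


def singularity_time(doubling_time: float = DOUBLING_TIME_MONTHS) -> float:
    """Limit of months_to_multiple as the multiple grows without bound."""
    # log2(2m / (m + 1)) tends to log2(2) = 1 as m grows.
    return doubling_time


if __name__ == "__main__":
    print(f"next doubling:  {months_to_multiple(2):.2f} months")
    print(f"thousandfold:   {months_to_multiple(1000):.2f} months")
    print(f"singularity at: {singularity_time():.1f} months")
```

Under these assumptions the next doubling arrives after roughly 7.5 months and the thousandfold mark falls just short of 18 months, in line with the figures in the text; the blow-up at 18 months is a property of the idealized model, not a prediction.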

These two scenarios are intended for illustration only; many other trajectories are possible, depending on the shape of the recalcitrance curve. The claim is simply that the strong feedback loop that sets in around the crossover point tends strongly to make the takeoff faster than it would otherwise have been.

Figure 9. One simple model of an intelligence explosion.

These observations notwithstanding, the shape of the recalcitrance curve in the relevant region is not yet well characterized. In particular, it is unclear how difficult it would be to improve the software quality of a human-level emulation or AI. The difficulty of expanding the hardware power available to a system is also not clear. Whereas today it would be relatively easy to increase the computing power available to a small project by spending a thousand times more on computing power or by waiting a few years for the price of computers to fall, it is possible that the first machine intelligence to reach the human baseline will result from a large project involving pricey supercomputers, which cannot be cheaply scaled, and that Moore’s law will by then have expired. For these reasons, although a fast or medium takeoff looks more likely, the possibility of a slow takeoff cannot be excluded.[25]

Decisive strategic advantage

A question distinct from, but related to, the question of kinetics is whether there will be one superintelligent power or many. Might an intelligence explosion propel one project so far ahead of all others as to make it able to dictate the future? Or will progress be more uniform, unfurling across a wide front, with many projects participating but none securing an overwhelming and permanent lead?

The preceding chapter analyzed one key parameter in determining the size of the gap that might plausibly open up between a leading power and its nearest competitors: namely, the speed of the transition from human to strongly superhuman intelligence. This suggests a first-cut analysis. If the takeoff is fast (completed over the course of hours, days, or weeks) then it is unlikely that two independent projects would be taking off concurrently: almost certainly, the first project would have completed its takeoff before any other project would have started its own. If the takeoff is slow (stretching over many years or decades) then there could plausibly be multiple projects undergoing takeoffs concurrently, so that although the projects would by the end of the transition have gained enormously in capability, there would be no time at which any project was far enough ahead of the others to give it an overwhelming lead. A takeoff of moderate speed is poised in between, with either condition a possibility: there might or might not be more than one project undergoing the takeoff at the same time.[1]

Will one machine intelligence project get so far ahead of the competition that it gets a decisive strategic advantage, that is, a level of technological and other advantages sufficient to enable it to achieve complete world domination? If a project did obtain a decisive strategic advantage, would it use it to suppress competitors and form a singleton (a world order in which there is at the global level a single decision-making agency)? And if there is a winning project, how “large” would it be, not in terms of physical size or budget but in terms of how many people’s desires would be controlling its design? We will consider these questions in turn.

One factor influencing the width of the gap between frontrunner and followers is the rate of diffusion of whatever it is that gives the leader a competitive advantage. A frontrunner might find it difficult to gain and maintain a large lead if followers can easily copy the frontrunner’s ideas and innovations. Imitation creates a headwind that disadvantages the leader and benefits laggards, especially if intellectual property is weakly protected. A frontrunner might also be especially vulnerable to expropriation, taxation, or being broken up under anti-monopoly regulation.

It would be a mistake, however, to assume that this headwind must increase monotonically with the gap between frontrunner and followers. Just as a racing cyclist who falls too far behind the competition is no longer shielded from the wind by the cyclists ahead, so a technology follower who lags sufficiently behind the cutting edge might find it hard to assimilate the advances being made at the frontier.[2] The gap in understanding and capability might have grown too large. The leader might have migrated to a more advanced technology platform, making subsequent innovations untransferable to the primitive platforms used by laggards. A sufficiently pre-eminent leader might have the ability to stem information leakage from its research programs and its sensitive installations, or to sabotage its competitors’ efforts to develop their own advanced capabilities.

If the frontrunner is an AI system, it could have attributes that make it easier for it to expand its capabilities while reducing the rate of diffusion. In human-run organizations, economies of scale are counteracted by bureaucratic inefficiencies and agency problems, including difficulties in keeping trade secrets.[3] These problems would presumably limit the growth of a machine intelligence project so long as it is operated by humans. An AI system, however, might avoid some of these scale diseconomies, since the AI’s modules (in contrast to human workers) need not have individual preferences that diverge from those of the system as a whole. Thus, the AI system could avoid a sizeable chunk of the inefficiencies arising from agency problems in human enterprises. The same advantage (having perfectly loyal parts) would also make it easier for an AI system to pursue long-range clandestine goals. An AI would have no disgruntled employees ready to be poached by competitors or bribed into becoming informants.[4]

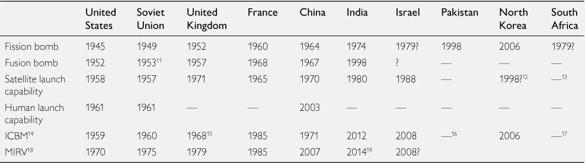

We can get a sense of the distribution of plausible gaps in development times by looking at some historical examples (see Box 5). It appears that lags in the range of a few months to a few years are typical of strategically significant technology projects.

Over long historical timescales, there has been an increase in the rate at which knowledge and technology diffuse around the globe. As a result, the temporal gaps between technology leaders and nearest followers have narrowed.

China managed to maintain a monopoly on silk production for over two thousand years. Archeological finds suggest that production might have begun around 3000 BC, or even earlier.[5] Sericulture was a closely held secret. Revealing the techniques was punishable by death, as was exporting silkworms or their eggs outside China. The Romans, despite the high price commanded by imported silk cloth in their empire, never learnt the art of silk manufacture. Not until around AD 300 did a Japanese expedition manage to capture some silkworm eggs along with four young Chinese girls, who were forced to divulge the art to their abductors.[6] Byzantium joined the club of producers in AD 522. The story of porcelain-making also features long lags. The craft was practiced in China during the Tang Dynasty around AD 600 (and might have been in use as early as AD 200), but was mastered by Europeans only in the eighteenth century.[7] Wheeled vehicles appeared in several sites across Europe and Mesopotamia around 3500 BC but reached the Americas only in post-Columbian times.[8]

On a grander scale, the human species took tens of thousands of years to spread across most of the globe, the Agricultural Revolution thousands of years, the Industrial Revolution only hundreds of years, and an Information Revolution could be said to have spread globally over the course of decades, though of course these transitions are not necessarily of equal profundity. (The Dance Dance Revolution video game spread from Japan to Europe and North America in just one year!)

Technological competition has been quite extensively studied, particularly in the contexts of patent races and arms races.[9] It is beyond the scope of our investigation to review this literature here. However, it is instructive to look at some examples of strategically significant technology races in the twentieth century (see Table 7).

With regard to these six technologies, which were regarded as strategically important by the rivaling superpowers because of their military or symbolic significance, the gaps between leader and nearest laggard were (very approximately) 49 months, 36 months, 4 months, 1 month, 4 months, and 60 months, respectively: longer than the duration of a fast takeoff and shorter than the duration of a slow takeoff.[10] In many cases, the laggard’s project benefitted from espionage and publicly available information. The mere demonstration of the feasibility of an invention can also encourage others to develop it independently; and fear of falling behind can spur the efforts to catch up.
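As a rough aid to reading these figures, the sketch below summarizes the six gaps with Python's standard `statistics` module. The variable and function names are illustrative; the values are the approximate month counts given in the text, in the order quoted.

```python
import statistics

# Approximate leader-to-laggard gaps in months, in the order quoted in the text.
GAPS_MONTHS = [49, 36, 4, 1, 4, 60]


def summarize(gaps):
    """Return basic summary statistics for a list of gaps (in months)."""
    return {
        "min": min(gaps),
        "max": max(gaps),
        "median": statistics.median(gaps),
        "mean": statistics.mean(gaps),
    }


if __name__ == "__main__":
    for name, value in summarize(GAPS_MONTHS).items():
        print(f"{name:>6}: {value:.1f} months")
```

The gaps run from 1 to 60 months with a median of 20 months. With only six very approximate data points, such summaries indicate an order of magnitude and nothing finer.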

Perhaps closer to the case of AI are mathematical inventions that do not require the development of new physical infrastructure. Often these are published in the academic literature and can thus be regarded as universally available; but in some cases, when the discovery appears to offer a strategic advantage, publication is delayed. For example, two of the most important ideas in public-key cryptography are the Diffie–Hellman key exchange protocol and the RSA encryption scheme. These were discovered by the academic community in 1976 and 1978, respectively, but it was later confirmed that they had been known to cryptographers at the UK’s communications security group since the early 1970s.[20]

Large software projects might offer a closer analogy with AI projects, but it is harder to give crisp examples of typical lags because software is usually rolled out in incremental installments and the functionalities of competing systems are often not directly comparable.

Table 7. Some strategically significant technology races

It is possible that globalization and increased surveillance will reduce typical lags between competing technology projects. Yet there is likely to be a lower bound on how short the average lag could become (in the absence of deliberate coordination).[21] Even absent dynamics that lead to snowball effects, some projects will happen to end up with better research staff, leadership, and infrastructure, or will just stumble upon better ideas. If two projects pursue alternative approaches, one of which turns out to work better, it may take the rival project many months to switch to the superior approach even if it is able to closely monitor what the forerunner is doing.

Combining these observations with our earlier discussion of the speed of the takeoff, we can conclude that it is highly unlikely that two projects would be close enough to undergo a fast takeoff concurrently; for a medium takeoff, it could easily go either way; and for a slow takeoff, it is highly likely that several projects would undergo the process in parallel. But the analysis needs a further step. The key question is not how many projects undergo a takeoff in tandem, but how many projects emerge on the yonder side sufficiently tightly clustered in capability that none of them has a decisive strategic advantage. If the takeoff process is relatively slow to begin and then gets faster, the distance between competing projects would tend to grow. To return to our bicycle metaphor, the situation would be analogous to a pair of cyclists making their way up a steep hill, one trailing some distance behind the other—the gap between them then expanding as the frontrunner reaches the peak and starts accelerating down the other side.
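The widening of the gap can be illustrated numerically. The sketch below is an assumption-laden toy, not part of the original analysis: it assumes capacity follows a simple accelerating curve, dI/dt = (c + I)·I starting from I = c at crossover, with c set by an 18-month pre-crossover doubling time. It then places a hypothetical follower a fixed three months behind the leader on the same curve and reports the ratio of their capacities. The function names and the three-month lag are illustrative choices.

```python
DOUBLING_TIME_MONTHS = 18.0  # assumed pre-crossover doubling time
LAG_MONTHS = 3.0             # hypothetical fixed lag between leader and follower


def capacity(months_since_crossover: float) -> float:
    """Capacity relative to its value at crossover under the assumed model.

    Solving dI/dt = (c + I) * I with I(0) = c gives I/c = u / (1 - u),
    where u = 2 ** (t / doubling_time - 1). Valid for 0 <= t < doubling_time.
    """
    t = months_since_crossover
    if not 0 <= t < DOUBLING_TIME_MONTHS:
        raise ValueError("model only defined from crossover up to the singularity")
    u = 2 ** (t / DOUBLING_TIME_MONTHS - 1)
    return u / (1 - u)


def lead_ratio(leader_months: float, lag: float = LAG_MONTHS) -> float:
    """Leader's capacity divided by that of a follower `lag` months behind."""
    return capacity(leader_months) / capacity(leader_months - lag)


if __name__ == "__main__":
    for t in (3, 6, 9, 12, 15, 17):
        print(f"month {t:>2}: leader is {lead_ratio(t):.2f}x the follower")
```

Although the time lag stays fixed, the capacity ratio rises steadily as both projects move along the accelerating curve, which is the cyclists-over-the-hilltop effect in numerical form. A different growth curve would give different numbers; with plain exponential growth the ratio would stay constant.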

Consider the following medium takeoff scenario. Suppose it takes a project one year to increase its AI’s capability from the human baseline to a strong superintelligence, and that one project enters this takeoff phase with a six-month lead over the next most advanced project. The two projects will be undergoing a takeoff concurrently. It might seem, then, that neither project gets a decisive strategic advantage. But that need not be so. Suppose it takes nine months to advance from the human baseline to the crossover point, and another three months from there to strong superintelligence. The frontrunner then attains strong superintelligence three months before the following project even reaches the crossover point. This would give the leading project a decisive strategic advantage and the opportunity to parlay its lead into permanent control by disabling the competing projects and establishing a singleton. (Note that the concept of a singleton is an abstract one: a singleton could be a democracy, a tyranny, a single dominant AI, a strong set of global norms that include effective provisions for their own enforcement, or even an alien overlord, its defining characteristic being simply that it is some form of agency that can solve all major global coordination problems. It may, but need not, resemble any familiar form of human governance.[22])
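The arithmetic of this scenario can be laid out explicitly. The sketch below is only an illustration: the `Project` class and its field names are hypothetical, and the durations are the ones supposed in the text (nine months from the human baseline to the crossover point, three more to strong superintelligence, and a six-month head start for the leader).

```python
from dataclasses import dataclass

# Durations supposed in the scenario, in months.
BASELINE_TO_CROSSOVER = 9
CROSSOVER_TO_SUPERINTELLIGENCE = 3


@dataclass(frozen=True)
class Project:
    """A hypothetical project, identified by when it reaches the human baseline."""
    name: str
    baseline_month: int

    @property
    def crossover_month(self) -> int:
        return self.baseline_month + BASELINE_TO_CROSSOVER

    @property
    def superintelligence_month(self) -> int:
        return self.crossover_month + CROSSOVER_TO_SUPERINTELLIGENCE


leader = Project("leader", baseline_month=0)
follower = Project("follower", baseline_month=6)  # six-month lag

# Both projects are mid-takeoff from the follower's start until the leader finishes.
overlap_months = leader.superintelligence_month - follower.baseline_month
# The leader finishes before the follower even reaches the crossover point.
head_start = follower.crossover_month - leader.superintelligence_month

if __name__ == "__main__":
    print(f"leader reaches strong superintelligence at month {leader.superintelligence_month}")
    print(f"follower reaches crossover at month {follower.crossover_month}")
    print(f"takeoffs overlap for {overlap_months} months")
    print(f"leader finishes {head_start} months before the follower's crossover")
```

The two takeoffs overlap for six months, yet the leader reaches strong superintelligence at month 12 while the follower does not reach the crossover point until month 15, which is the three-month margin described in the text.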

Since there is an especially strong prospect of explosive growth just after the crossover point, when the strong positive feedback loop of optimization power kicks in, a scenario of this kind is a serious possibility, and it increases the chances that the leading project will attain a decisive strategic advantage even if the takeoff is not fast.

Some paths to superintelligence require great resources and are therefore likely to be the preserve of large well-funded projects. Whole brain emulation, for instance, requires many different kinds of expertise and lots of equipment. Biological intelligence enhancements and brain–computer interfaces would also have a large scale factor: while a small biotech firm might invent one or two drugs, achieving superintelligence along one of these paths (if doable at all) would likely require many inventions and many tests, and therefore the backing of an industrial sector or a well-funded national program. Achieving collective superintelligence by making organizations and networks more efficient requires even more extensive input, involving much of the world economy.