Superintelligence: Paths, Dangers, Strategies

Authors: Nick Bostrom

The harder it seems to solve the control problem for artificial intelligence, the more tempting it is to promote the whole brain emulation path as a less risky alternative. There are several issues, however, that must be analyzed before one can arrive at a well-considered judgment.[21]

First, there is the issue of technology coupling, already discussed earlier. We pointed out that an effort to develop whole brain emulation could result in neuromorphic AI instead, a form of machine intelligence that may be especially unsafe.

But let us assume, for the sake of argument, that we actually achieve whole brain emulation (WBE). Would this be safer than AI? This, itself, is a complicated issue. There are at least three *putative* advantages of WBE: (i) that its performance characteristics would be better understood than those of AI; (ii) that it would inherit human motives; and (iii) that it would result in a slower takeoff. Let us very briefly reflect on each.

(i) That it should be easier to understand the intellectual performance characteristics of an emulation than of an AI sounds plausible. We have abundant experience with the strengths and weaknesses of human intelligence but no experience with human-level artificial intelligence. However, to understand what a snapshot of a digitized human intellect can and cannot do is not the same as to understand how such an intellect will respond to modifications aimed at enhancing its performance. An artificial intellect, by contrast, might be carefully designed to be understandable, in both its static and dynamic dispositions. So while whole brain emulation may be more predictable in its intellectual performance than a generic AI at a comparable stage of development, it is unclear whether whole brain emulation would be dynamically more predictable than an AI engineered by competent safety-conscious programmers.

(ii) As for an emulation inheriting the motivations of its human template, this is far from guaranteed. Capturing human evaluative dispositions might require a very high-fidelity emulation. Even if some individual’s motivations *were* perfectly captured, it is unclear how much safety would be purchased. Humans can be untrustworthy, selfish, and cruel. While templates would hopefully be selected for exceptional virtue, it may be hard to foretell how someone will act when transplanted into radically alien circumstances, superhumanly enhanced in intelligence, and tempted with an opportunity for world domination. It is true that emulations would at least be more likely to have *human-like* motivations (as opposed to valuing only paperclips or discovering digits of pi). Depending on one’s views on human nature, this might or might not be reassuring.[22]

(iii) It is not clear why whole brain emulation should result in a slower takeoff than artificial intelligence. Perhaps with whole brain emulation one should expect less hardware overhang, since whole brain emulation is less computationally efficient than artificial intelligence can be. Perhaps, also, an AI system could more easily absorb all available computing power into one giant integrated intellect, whereas whole brain emulation would forgo quality superintelligence and pull ahead of humanity only in speed and size of population. If whole brain emulation does lead to a slower takeoff, this could have benefits in terms of alleviating the control problem. A slower takeoff would also make a multipolar outcome more likely. But whether a multipolar outcome is desirable is very doubtful.

There is another important complication with the general idea that getting whole brain emulation first is safer: the need to cope with a *second transition*. Even if the first form of human-level machine intelligence is emulation-based, it would still remain feasible to develop artificial intelligence. AI in its mature form has important advantages over WBE, making AI the ultimately more powerful technology.[23]

While mature AI would render WBE obsolete (except for the special purpose of preserving individual human minds), the reverse does not hold.

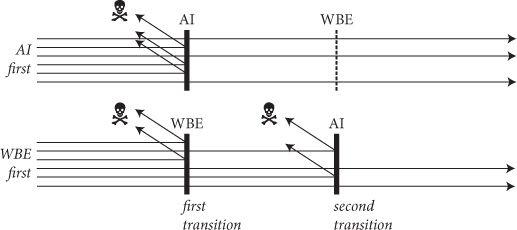

What this means is that if AI is developed first, there might be a single wave of the intelligence explosion. But if WBE is developed first, there may be two waves: first, the arrival of WBE; and later, the arrival of AI. The total existential risk along the WBE-first path is the *sum* of the risk in the first transition and the risk in the second transition (conditional on having made it through the first); see Figure 13.[24]
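Read as probabilities, this accounting can be written out explicitly. The symbols below are introduced here only for illustration and are not the book's notation: p_WBE is the probability of existential catastrophe during the WBE transition, p_AI|WBE the probability of catastrophe during the later AI transition given that the first was survived, and p_AI the risk of an AI-first transition. The second transition's risk only counts in worlds that survive the first, which is why it is weighted by (1 - p_WBE):

```latex
R_{\text{WBE-first}} = p_{\text{WBE}} + \left(1 - p_{\text{WBE}}\right) p_{\text{AI}\mid\text{WBE}},
\qquad
R_{\text{AI-first}} = p_{\text{AI}}
```

On this reading, the WBE-first path is the less risky one only when R_WBE-first < R_AI-first.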

How much safer would the AI transition be in a WBE world? One consideration is that the AI transition would be less explosive if it occurs after some form of machine intelligence has already been realized. Emulations, running at digital speeds and in numbers that might far exceed the biological human population, would reduce the cognitive differential, making it easier for emulations to control the AI. This consideration is not too weighty, since the gap between AI and WBE could still be wide. However, if the emulations were not just faster and more numerous but also somewhat qualitatively smarter than biological humans (or at least drawn from the top end of the human distribution) then the WBE-first scenario would have advantages paralleling those of human cognitive enhancement, which we discussed above.

Figure 13

Artificial intelligence or whole brain emulation first? In an AI-first scenario, there is one transition that creates an existential risk. In a WBE-first scenario, there are two risky transitions, first the development of WBE and then the development of AI. The total existential risk along the WBE-first scenario is the sum of these. However, the risk of an AI transition might be lower if it occurs in a world where WBE has already been successfully introduced.

Another consideration is that the transition to WBE would extend the lead of the frontrunner. Consider a scenario in which the frontrunner has a six-month lead over the closest follower in developing whole brain emulation technology. Suppose that the first emulations to be created are cooperative, safety-focused, and patient. If they run on fast hardware, these emulations could spend subjective eons pondering how to create safe AI. For example, if they run at a speedup of 100,000× and are able to work on the control problem undisturbed for six months of sidereal time, they could hammer away at the control problem for fifty millennia before facing competition from other emulations. Given sufficient hardware, they could hasten their progress by fanning out myriad copies to work independently on subproblems. If the frontrunner uses its six-month lead to form a singleton, it could buy its emulation AI-development team an unlimited amount of time to work on the control problem.[25]
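The fifty-millennia figure is simple arithmetic: six months of sidereal time multiplied by a 100,000× speedup. The Python sketch below reproduces it. The `copies` parameter, standing in for the "myriad copies" working in parallel, is a hypothetical addition; it simply multiplies total subjective effort and ignores any coordination overhead, which is an assumption rather than anything the text claims.

```python
MONTHS_PER_YEAR = 12


def subjective_years(sidereal_months: float, speedup: float, copies: int = 1) -> float:
    """Subjective work-years accumulated by `copies` emulations, each running
    `speedup` times faster than real time, over `sidereal_months` of wall-clock time.
    Assumes (hypothetically) that parallel copies add effort without overhead."""
    return sidereal_months * speedup * copies / MONTHS_PER_YEAR


# Figures from the text: a six-month lead at a 100,000x speedup.
print(f"{subjective_years(6, 100_000):,.0f} subjective years")

# Hypothetical: 1,000 parallel copies, each working on its own subproblem.
print(f"{subjective_years(6, 100_000, copies=1_000):,.0f} subjective work-years")
```

The first line prints 50,000 subjective years, matching the fifty millennia in the text. With copies, each instance still experiences fifty millennia; what grows is the aggregate effort across subproblems.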

On balance, it looks like the risk of the AI transition would be reduced if WBE comes before AI. However, when we combine the residual risk in the AI transition with the risk of an antecedent WBE transition, it becomes very unclear how the total existential risk along the WBE-first path stacks up against the risk along the AI-first path. Only if one is quite pessimistic about biological humanity’s ability to manage an AI transition—after taking into account that human nature or civilization might have improved by the time we confront this challenge—should the WBE-first path seem attractive.
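To see how this comparison can cut either way, here is a small Python sketch with made-up probabilities (all numbers are hypothetical; the text gives none). It treats the WBE-first risk as the chance of failing in the WBE transition, or surviving it and then failing in the AI transition, and compares that total with a given AI-first risk.

```python
def total_risk_wbe_first(p_wbe: float, p_ai_after_wbe: float) -> float:
    """Chance of existential catastrophe along the WBE-first path: fail in the
    WBE transition, or survive it and then fail in the later AI transition."""
    return p_wbe + (1 - p_wbe) * p_ai_after_wbe


def wbe_first_is_safer(p_ai_first: float, p_wbe: float, p_ai_after_wbe: float) -> bool:
    """True if the WBE-first path carries strictly less total risk than AI-first."""
    return total_risk_wbe_first(p_wbe, p_ai_after_wbe) < p_ai_first


# Hypothetical inputs for illustration only.
P_WBE = 0.10           # risk in the WBE transition itself
P_AI_AFTER_WBE = 0.20  # risk in the AI transition, in a world that already has WBE

threshold = total_risk_wbe_first(P_WBE, P_AI_AFTER_WBE)
print(f"WBE-first wins only if AI-first risk exceeds {threshold:.2f}")

for p_ai_first in (0.25, 0.40):
    # At 0.25 the AI transition is safer after WBE (0.20 < 0.25), yet the total is not.
    print(p_ai_first, wbe_first_is_safer(p_ai_first, P_WBE, P_AI_AFTER_WBE))
```

Running it reports a threshold of 0.28. With an AI-first risk of 0.25, the WBE-first path loses even though its own AI transition is safer; only at the more pessimistic 0.40 does it win, which mirrors the point that the WBE-first path looks attractive mainly to those pessimistic about an AI-first transition.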

To figure out whether whole brain emulation technology should be promoted, there are some further important points to place in the balance. Most significantly, there is the technology coupling mentioned earlier: a push toward WBE could instead produce neuromorphic AI. This is a reason against pushing for WBE.[26]

No doubt, there are *some* synthetic AI designs that are less safe than *some* neuromorphic designs. In expectation, however, it seems that neuromorphic designs are less safe. One ground for this is that imitation can substitute for understanding. To build something from the ground up one must usually have a reasonably good understanding of how the system will work. Such understanding may not be necessary to merely copy features of an existing system. Whole brain emulation relies on wholesale copying of biology, which may not require a comprehensive computational systems-level understanding of cognition (though a large amount of component-level understanding would undoubtedly be needed). Neuromorphic AI may be like whole brain emulation in this regard: it would be achieved by cobbling together pieces plagiarized from biology without the engineers necessarily having a deep mathematical understanding of how the system works. But neuromorphic AI would be *unlike* whole brain emulation in another regard: it would not have human motivations by default.[27]

This consideration argues against pursuing the whole brain emulation approach to the extent that it would likely produce neuromorphic AI.

A second point to put in the balance is that WBE is more likely to give us advance notice of its arrival. With AI it is always possible that somebody will make an unexpected conceptual breakthrough. WBE, by contrast, will require many laborious precursor steps—high-throughput scanning facilities, image processing software, detailed neural modeling work. We can therefore be confident that WBE is not imminent (not less than, say, fifteen or twenty years away). This means that efforts to accelerate WBE will make a difference mainly in scenarios in which machine intelligence is developed comparatively late. This could make WBE investments attractive to somebody who wants the intelligence explosion to preempt other existential risks but is wary of supporting AI for fear of triggering an intelligence explosion prematurely, before the control problem has been solved. However, the uncertainty over the relevant timescales is probably currently too large to enable this consideration to carry much weight.[28]

A strategy of promoting WBE is thus most attractive if (a) one is very pessimistic about humans solving the control problem for AI, (b) one is not too worried about neuromorphic AI, multipolar outcomes, or the risks of a second transition, (c) one thinks that the default timing of WBE and AI is close, and (d) one prefers superintelligence to be developed neither very late nor very early.

I fear the blog commenter “washbash” may speak for many when he or she writes:

I instinctively think go faster. Not because I think this is better for the world. Why should I care about the world when I am dead and gone? I want it to go fast, damn it! This increases the chance I have of experiencing a more technologically advanced future.[29]

From the person-affecting standpoint, we have greater reason to rush forward with all manner of radical technologies that could pose existential risks. This is because the default outcome is that almost everyone who now exists is dead within a century.

The case for rushing is especially strong with regard to technologies that could extend our lives and thereby increase the expected fraction of the currently existing population that may still be around for the intelligence explosion. If the machine intelligence revolution goes well, the resulting superintelligence could almost certainly devise means to indefinitely prolong the lives of the then still-existing humans, not only keeping them alive but restoring them to health and youthful vigor, and enhancing their capacities well beyond what we currently think of as the human range; or helping them shuffle off their mortal coils altogether by uploading their minds to a digital substrate and endowing their liberated spirits with exquisitely good-feeling virtual embodiments. With regard to technologies that do not promise to save lives, the case for rushing is weaker, though perhaps still sufficiently supported by the hope of raised standards of living.[30]

The same line of reasoning makes the person-affecting perspective favor many risky technological innovations that promise to hasten the onset of the intelligence explosion, even when those innovations are disfavored in the impersonal perspective. Such innovations could shorten the wolf hours during which we individually must hang on to our perch if we are to live to see the daybreak of the posthuman age. From the person-affecting standpoint, faster hardware progress thus seems desirable, as does faster progress toward WBE. Any adverse effect on existential risk is probably outweighed by the personal benefit of an increased chance of the intelligence explosion happening in the lifetime of currently existing people.[31]

One important parameter is the degree to which the world will manage to coordinate and collaborate in the development of machine intelligence. Collaboration would bring many benefits. Let us take a look at how this parameter might affect the outcome and what levers we might have for increasing the extent and intensity of collaboration.