Superintelligence: Paths, Dangers, Strategies

Authors: Nick Bostrom


Lists of Figures, Tables, and Boxes

1. Past developments and present capabilities

Opinions about the future of machine intelligence

Sources of advantage for digital intelligence

4. The kinetics of an intelligence explosion

Timing and speed of the takeoff

Non-machine intelligence paths

Optimization power and explosivity

5. Decisive strategic advantage

Will the frontrunner get a decisive strategic advantage?

How large will the successful project be?

From decisive strategic advantage to singleton

Functionalities and superpowers

The relation between intelligence and motivation

8. Is the default outcome doom?

Existential catastrophe as the default outcome of an intelligence explosion?

10. Oracles, genies, sovereigns, tools

The Malthusian principle in a historical perspective

Population growth and investment

Life in an algorithmic economy

Voluntary slavery, casual death

Would maximally efficient work be fun?

Evolution is not necessarily up

Post-transition formation of a singleton?

Superorganisms and scale economies

13. Choosing the criteria for choosing

The need for indirect normativity

Coherent extrapolated volition

Science and technology strategy

Differential technological development

Rates of change and cognitive enhancement

Should whole brain emulation research be promoted?

The person-affecting perspective favors speed

The race dynamic and its perils

On the benefits of collaboration

1. Long-term history of world GDP

2. Overall long-term impact of HLMI

4. Reconstructing 3D neuroanatomy from electron microscope images

5. Whole brain emulation roadmap

6. Composite faces as a metaphor for spell-checked genomes

8. A less anthropomorphic scale?

9. One simple model of an intelligence explosion

10. Phases in an AI takeover scenario

11. Schematic illustration of some possible trajectories for a hypothetical wise singleton

12. Results of anthropomorphizing alien motivation

13. Artificial intelligence or whole brain emulation first?

14. Risk levels in AI technology races

2. When will human-level machine intelligence be attained?

3. How long from human level to superintelligence?

4. Capabilities needed for whole brain emulation

5. Maximum IQ gains from selecting among a set of embryos

6. Possible impacts from genetic selection in different scenarios

7. Some strategically significant technology races

8. Superpowers: some strategically relevant tasks and corresponding skill sets

9. Different kinds of tripwires

11. Features of different system castes

12. Summary of value-loading techniques

3. What would it take to recapitulate evolution?

4. On the kinetics of an intelligence explosion

5. Technology races: some historical examples

6. The mail-ordered DNA scenario

7. How big is the cosmic endowment?

9. Strange solutions from blind search

10. Formalizing value learning

11. An AI that wants to be friendly

Past developments and present capabilities

We begin by looking back. History, at the largest scale, seems to exhibit a sequence of distinct growth modes, each much more rapid than its predecessor. This pattern has been taken to suggest that another (even faster) growth mode might be possible. However, we do not place much weight on this observation—this is not a book about “technological acceleration” or “exponential growth” or the miscellaneous notions sometimes gathered under the rubric of “the singularity.” Next, we review the history of artificial intelligence. We then survey the field’s current capabilities. Finally, we glance at some recent expert opinion surveys, and contemplate our ignorance about the timeline of future advances.

A mere few million years ago our ancestors were still swinging from the branches in the African canopy. On a geological or even evolutionary timescale, the rise of Homo sapiens from our last common ancestor with the great apes happened swiftly. We developed upright posture, opposable thumbs, and, crucially, some relatively minor changes in brain size and neurological organization that led to a great leap in cognitive ability. As a consequence, humans can think abstractly, communicate complex thoughts, and culturally accumulate information over the generations far better than any other species on the planet.

These capabilities let humans develop increasingly efficient productive technologies, making it possible for our ancestors to migrate far away from the rainforest and the savanna. Especially after the adoption of agriculture, population densities rose along with the total size of the human population. More people meant more ideas; greater densities meant that ideas could spread more readily and that some individuals could devote themselves to developing specialized skills. These developments increased the rate of growth of economic productivity and technological capacity. Later developments, related to the Industrial Revolution, brought about a second, comparable step change in the rate of growth.

Such changes in the rate of growth have important consequences. A few hundred thousand years ago, in early human (or hominid) prehistory, growth was so slow that it took on the order of one million years for human productive capacity to increase sufficiently to sustain an additional one million individuals living at subsistence level. By 5000 BC, following the Agricultural Revolution, the rate of growth had increased to the point where the same amount of growth took just two centuries. Today, following the Industrial Revolution, the world economy grows on average by that amount every ninety minutes.[1]

Even the present rate of growth will produce impressive results if maintained for a moderately long time. If the world economy continues to grow at the same pace as it has over the past fifty years, then the world will be some 4.8 times richer by 2050 and about 34 times richer by 2100 than it is today.[2]
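The two multipliers quoted above pin down the growth rate they presuppose. The following is a minimal sketch in Python, assuming a constant compound annual rate between 2050 and 2100. The passage states neither the rate nor the base year, so both are inferred here rather than taken from the source, and the function names are purely illustrative.

```python
import math

# Multipliers quoted in the text, relative to "today" (base year not stated).
RICHER_BY_2050 = 4.8
RICHER_BY_2100 = 34.0


def implied_annual_growth(mult_early, mult_late, years_apart):
    """Constant annual growth rate that turns mult_early into mult_late."""
    return (mult_late / mult_early) ** (1.0 / years_apart) - 1.0


def doubling_time_years(annual_growth):
    """Years needed to double at a constant compound annual growth rate."""
    return math.log(2.0) / math.log(1.0 + annual_growth)


def years_to_reach(multiplier, annual_growth):
    """Years of compounding needed to grow by the given multiplier."""
    return math.log(multiplier) / math.log(1.0 + annual_growth)


if __name__ == "__main__":
    # Assumption: growth is constant, so the 2050-to-2100 ratio fixes the rate.
    rate = implied_annual_growth(RICHER_BY_2050, RICHER_BY_2100, 2100 - 2050)
    print(f"implied growth rate: {rate:.2%} per year")
    print(f"doubling time: {doubling_time_years(rate):.1f} years")
    # Inferred, not stated in the text: the year the multipliers are measured from.
    base_year = 2050 - years_to_reach(RICHER_BY_2050, rate)
    print(f"implied base year: {base_year:.0f}")
```

Under that assumption the two figures are mutually consistent with growth of roughly 4 percent a year, a doubling time of a little under eighteen years, and a baseline of about 2010.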

Yet the prospect of continuing on a steady exponential growth path pales in comparison to what would happen if the world were to experience another step change in the rate of growth comparable in magnitude to those associated with the Agricultural Revolution and the Industrial Revolution. The economist Robin Hanson estimates, based on historical economic and population data, a characteristic world economy doubling time for Pleistocene hunter–gatherer society of 224,000 years; for farming society, 909 years; and for industrial society, 6.3 years.[3] (In Hanson’s model, the present epoch is a mixture of the farming and the industrial growth modes: the world economy as a whole is not yet growing at the 6.3-year doubling rate.) If another such transition to a different growth mode were to occur, and it were of similar magnitude to the previous two, it would result in a new growth regime in which the world economy would double in size about every two weeks.
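The "about every two weeks" figure can be checked with simple arithmetic on the three doubling times quoted above. The sketch below is one way to do so, not Hanson's own model: it assumes that a transition "of similar magnitude" means shortening the doubling time by a factor like those of the two earlier transitions, and the helper names are hypothetical.

```python
import math

# Doubling times (in years) quoted in the text from Hanson's estimates.
DOUBLING_TIME_YEARS = {
    "hunter-gatherer": 224_000.0,
    "farming": 909.0,
    "industrial": 6.3,
}
DAYS_PER_YEAR = 365.25


def speedup_factors(doubling_times):
    """Ratio of each doubling time to the one in the following growth mode."""
    return [slow / fast for slow, fast in zip(doubling_times, doubling_times[1:])]


def next_doubling_time_days(current_years, factor):
    """Doubling time, in days, after one more speedup by the given factor."""
    return current_years / factor * DAYS_PER_YEAR


if __name__ == "__main__":
    times = list(DOUBLING_TIME_YEARS.values())
    factors = speedup_factors(times)
    # Assumption: the next transition resembles one of the past two,
    # or their geometric mean.
    candidates = {
        "like farming transition": factors[0],
        "like industrial transition": factors[1],
        "geometric mean of both": math.sqrt(factors[0] * factors[1]),
    }
    for label, factor in candidates.items():
        days = next_doubling_time_days(times[-1], factor)
        print(f"{label}: speedup {factor:.0f}x, doubling every {days:.1f} days")
```

With the quoted figures, the two historical speedups are roughly 246-fold and 144-fold, which puts the extrapolated doubling time somewhere between about 9 and 16 days, in line with the two-week estimate.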

Such a growth rate seems fantastic by current lights. Observers in earlier epochs might have found it equally preposterous to suppose that the world economy would one day be doubling several times within a single lifespan. Yet that is the extraordinary condition we now take to be ordinary.

The idea of a coming technological singularity has by now been widely popularized, starting with Vernor Vinge’s seminal essay and continuing with the writings of Ray Kurzweil and others.[4] The term “singularity,” however, has been used confusedly in many disparate senses and has accreted an unholy (yet almost millenarian) aura of techno-utopian connotations.[5] Since most of these meanings and connotations are irrelevant to our argument, we can gain clarity by dispensing with the “singularity” word in favor of more precise terminology.

The singularity-related idea that interests us here is the possibility of an

intelligence explosion

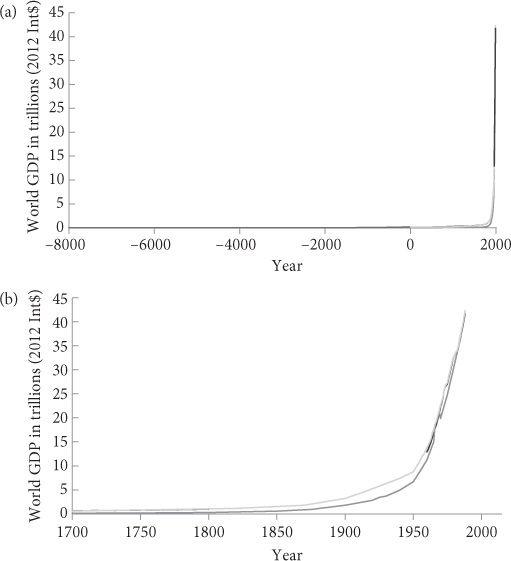

, particularly the prospect of machine superintelligence. There may be those who are persuaded by growth diagrams like the ones in

Figure 1

that another drastic change in growth mode is in the cards, comparable to the Agricultural or Industrial Revolution. These folk may then reflect that it is hard

to conceive of a scenario in which the world economy’s doubling time shortens to mere weeks that does not involve the creation of minds that are much faster and more efficient than the familiar biological kind. However, the case for taking seriously the prospect of a machine intelligence revolution need not rely on curve-fitting exercises or extrapolations from past economic growth. As we shall see, there are stronger reasons for taking heed.

Figure 1. Long-term history of world GDP. Plotted on a linear scale, the history of the world economy looks like a flat line hugging the x-axis, until it suddenly spikes vertically upward. (a) Even when we zoom in on the most recent 10,000 years, the pattern remains essentially one of a single 90° angle. (b) Only within the past 100 years or so does the curve lift perceptibly above the zero-level. (The different lines in the plot correspond to different data sets, which yield slightly different estimates.[6])

Machines matching humans in general intelligence (that is, possessing common sense and an effective ability to learn, reason, and plan to meet complex information-processing challenges across a wide range of natural and abstract domains) have been expected since the invention of computers in the 1940s. At that time, the advent of such machines was often placed some twenty years into the future.[7] Since then, the expected arrival date has been receding at a rate of one year per year; so that today, futurists who concern themselves with the possibility of artificial general intelligence still often believe that intelligent machines are a couple of decades away.[8]

Two decades is a sweet spot for prognosticators of radical change: near enough to be attention-grabbing and relevant, yet far enough to make it possible to suppose that a string of breakthroughs, currently only vaguely imaginable, might by then have occurred. Contrast this with shorter timescales: most technologies that will have a big impact on the world in five or ten years from now are already in limited use, while technologies that will reshape the world in less than fifteen years probably exist as laboratory prototypes. Twenty years may also be close to the typical duration remaining of a forecaster’s career, bounding the reputational risk of a bold prediction.

From the fact that some individuals have overpredicted artificial intelligence in the past, however, it does not follow that AI is impossible or will never be developed.[9] The main reason why progress has been slower than expected is that the technical difficulties of constructing intelligent machines have proved greater than the pioneers foresaw. But this leaves open just how great those difficulties are and how far we now are from overcoming them. Sometimes a problem that initially looks hopelessly complicated turns out to have a surprisingly simple solution (though the reverse is probably more common).

In the next chapter, we will look at different paths that may lead to human-level machine intelligence. But let us note at the outset that however many stops there are between here and human-level machine intelligence, the latter is not the final destination. The next stop, just a short distance farther along the tracks, is superhuman-level machine intelligence. The train might not pause or even decelerate at Humanville Station. It is likely to swoosh right by.

The mathematician I. J. Good, who had served as chief statistician in Alan Turing’s code-breaking team in World War II, might have been the first to enunciate the essential aspects of this scenario. In an oft-quoted passage from 1965, he wrote:

Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an “intelligence explosion,” and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control.[10]

It may seem obvious now that major existential risks would be associated with such an intelligence explosion, and that the prospect should therefore be examined with the utmost seriousness even if it were known (which it is not) to have but a moderately small probability of coming to pass. The pioneers of artificial intelligence, however, notwithstanding their belief in the imminence of human-level AI, mostly did not contemplate the possibility of greater-than-human AI. It is as though their speculation muscle had so exhausted itself in conceiving the radical possibility of machines reaching human intelligence that it could not grasp the corollary: that machines would subsequently become superintelligent.

The AI pioneers for the most part did not countenance the possibility that their enterprise might involve risk.[11] They gave no lip service, let alone serious thought, to any safety concern or ethical qualm related to the creation of artificial minds and potential computer overlords: a lacuna that astonishes even against the background of the era’s not-so-impressive standards of critical technology assessment.[12] We must hope that by the time the enterprise eventually does become feasible, we will have gained not only the technological proficiency to set off an intelligence explosion but also the higher level of mastery that may be necessary to make the detonation survivable.

But before we turn to what lies ahead, it will be useful to take a quick glance at the history of machine intelligence to date.

In the summer of 1956 at Dartmouth College, ten scientists sharing an interest in neural nets, automata theory, and the study of intelligence convened for a six-week workshop. This Dartmouth Summer Project is often regarded as the cockcrow of artificial intelligence as a field of research. Many of the participants would later be recognized as founding figures. The optimistic outlook among the delegates is reflected in the proposal submitted to the Rockefeller Foundation, which provided funding for the event:

We propose that a 2 month, 10 man study of artificial intelligence be carried out…. The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines that use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer.

In the six decades since this brash beginning, the field of artificial intelligence has been through periods of hype and high expectations alternating with periods of setback and disappointment.

The first period of excitement, which began with the Dartmouth meeting, was later described by John McCarthy (the event’s main organizer) as the “Look, Ma, no hands!” era. During these early days, researchers built systems designed to refute claims of the form “No machine could ever do X!” Such skeptical claims were common at the time. To counter them, the AI researchers created small systems that achieved X in a “microworld” (a well-defined, limited domain that enabled a pared-down version of the performance to be demonstrated), thus providing a proof of concept and showing that X could, in principle, be done by machine. One such early system, the Logic Theorist, was able to prove most of the theorems in the second chapter of Whitehead and Russell’s Principia Mathematica, and even came up with one proof that was much more elegant than the original, thereby debunking the notion that machines could “only think numerically” and showing that machines were also able to do deduction and to invent logical proofs.[13]

A follow-up program, the General Problem Solver, could in principle solve a wide range of formally specified problems.[14] Programs that could solve calculus problems typical of first-year college courses, visual analogy problems of the type that appear in some IQ tests, and simple verbal algebra problems were also written.[15] The Shakey robot (so named because of its tendency to tremble during operation) demonstrated how logical reasoning could be integrated with perception and used to plan and control physical activity.[16] The ELIZA program showed how a computer could impersonate a Rogerian psychotherapist.[17] In the mid-seventies, the program SHRDLU showed how a simulated robotic arm in a simulated world of geometric blocks could follow instructions and answer questions in English that were typed in by a user.[18] In later decades, systems would be created that demonstrated that machines could compose music in the style of various classical composers, outperform junior doctors in certain clinical diagnostic tasks, drive cars autonomously, and make patentable inventions.[19] There has even been an AI that cracked original jokes.[20] (Not that its level of humor was high, as in “What do you get when you cross an optic with a mental object? An eye-dea”, but children reportedly found its puns consistently entertaining.)