In Pursuit of the Unknown

Authors: Ian Stewart

Building yet again on analogies with ecosystems, Haldane and May offer some examples of how stability might be enhanced. Some correspond to the regulators' own instincts, such as requiring banks to hold more capital, which buffers them against shocks. Others do not; an example is the suggestion that regulators should focus not on the risks associated with individual banks, but on those associated with the entire financial system. The complexity of the derivatives market could be reduced by requiring all transactions to pass through a centralised clearing agency. This would have to be extremely robust, supported by all major nations, but if it were, then propagating shocks would be damped down as they passed through it.

Another suggestion is increased diversity of trading methods and risk assessment. An ecological monoculture is unstable because any shock that occurs is likely to affect everything simultaneously, in the same way. When all banks are using the same methods to assess risk, the same problem arises: when they get it wrong, they all get it wrong at the same time. The financial crisis arose in part because all of the main banks were funding their potential liabilities in the same way, assessing the value of their assets in the same way, and assessing their likely risk in the same way.

The final suggestion is modularity. It is thought that ecosystems stabilise themselves by organising (through evolution) into more or less self-contained modules, connected to each other in a fairly simple manner. Modularity helps to prevent shocks propagating. This is why regulators worldwide are giving serious consideration to breaking up big banks and replacing them by a number of smaller ones. As Alan Greenspan, a distinguished American economist and former chairman of the Federal Reserve of the USA, said of banks: 'If they're too big to fail, they're too big.'

Was an equation to blame for the financial crash, then?

An equation is a tool, and like any tool, it has to be wielded by someone who knows how to use it, and for the right purpose. The Black–Scholes equation may have contributed to the crash, but only because it was abused. It was no more responsible for the disaster than a trader's computer would have been if its use led to a catastrophic loss. The blame for the failure of tools should rest with those who are responsible for their use. There is a danger that the financial sector may turn its back on mathematical analysis, when what it actually needs is a better range of models, and, crucially, a solid understanding of their limitations. The financial system is too complex to be run on human hunches and vague reasoning. It desperately needs more mathematics, not less. But it also needs to learn how to use mathematics intelligently, rather than as some kind of magical talisman.

When someone writes down an equation, there isn't a sudden clap of thunder after which everything is different. Most equations have little or no effect (I write them down all the time, and believe me, I know). But even the greatest and most influential equations need help to change the world: efficient ways to solve them, people with the imagination and drive to exploit what they tell us, machinery, resources, materials, money. Bearing this in mind, equations have repeatedly opened up new directions for humanity, and acted as our guides as we explore them.

It took a lot more than seventeen equations to get us where we are today. My list is a selection of some of the most influential, and each of them required a host of others before it became seriously useful. But each of the seventeen fully deserves inclusion, because it played a pivotal role in history. Pythagoras led to practical methods for surveying our lands and navigating our way to new ones. Newton tells us how planets move and how to send space probes to explore them. Maxwell provided a vital clue that led to radio, TV, and modern communications. Shannon derived unavoidable limits to how efficient those communications can be.

Often, what an equation led to was quite different from what interested its inventors or discoverers. Who would have predicted in the fifteenth century that a baffling, apparently impossible number, stumbled upon while solving algebra problems, would be indelibly linked to the even more baffling and apparently impossible world of quantum physics, let alone that this would pave the road to miraculous devices that can solve a million algebra problems every second, and let us instantly be seen and heard by friends on the other side of the planet? How would Fourier have reacted if he had been told that his new method for studying heat flow would be built into machines the size of a pack of cards, able to paint extraordinarily accurate and detailed pictures of anything they are pointed at, in colour, even moving, with thousands of them contained in something the size of a coin?

Equations trigger events, and events, to paraphrase former British Prime Minister Harold Macmillan, are what keep us awake at night. When a revolutionary equation is unleashed, it develops a life of its own. The consequences can be good or bad, even when the original intention was benevolent, as it was for every one of my seventeen. Einstein's new physics gave us a new understanding of the world, but one of the things we used it for was nuclear weapons. Not as directly as popular myth claims, but it played its part nonetheless. The Black–Scholes equation created a vibrant financial sector and then threatened to destroy it. Equations are what we make of them, and the world can be changed for the worse as well as for the better.

Equations come in many kinds. Some are mathematical truths, tautologies: think of Napier's logarithms. But tautologies can still be powerful aids to human thought and deed. Some are statements about the physical world, which for all we know could have been different. Equations of this kind tell us nature's laws, and solving them tells us the consequences of those laws. Some have both elements: Pythagoras's equation is a theorem in Euclid's geometry, but it also governs measurements made by surveyors and navigators. Some are little better than definitions, but i and information tell us a great deal, once we have defined them.

Some equations are universally valid. Some describe the world very accurately, but not perfectly. Some are less accurate, confined to more limited realms, yet offer vital insights. Some are basically plain wrong, yet they can act as stepping-stones to something better. They may still have a huge effect.

Some even open up difficult questions, philosophical in nature, about the world we live in and our own place within it. The problem of quantum measurement, dramatised by Schrödinger's hapless cat, is one such. The second law of thermodynamics raises deep issues about disorder and the arrow of time. In both cases, some of the apparent paradoxes can be resolved, in part, by thinking less about the content of the equation and more about the context in which it applies. Not the symbols, but the boundary conditions. The arrow of time is not a problem about entropy: it's a problem about the context in which we think about entropy.

Existing equations can acquire new importance. The search for fusion power, as a clean alternative to nuclear power and fossil fuels, requires an understanding of how extremely hot gas, forming a plasma, moves in a magnetic field. The atoms of the gas lose electrons and become electrically charged. So the problem is one in magnetohydrodynamics, requiring a combination of the existing equations for fluid flow and for electromagnetism. The combination leads to new phenomena, suggesting how to keep the plasma stable at the temperatures needed to produce fusion. The equations are old favourites.

There is (or may be) one equation, above all, that physicists and cosmologists would give their eye teeth to lay hands on: a Theory of Everything, which in Einstein's day was called a Unified Field Theory. This is the long-sought equation that unifies quantum mechanics and relativity, and Einstein spent his later years in a fruitless quest to find it. These two theories are both successful, but their successes occur in different domains: the very small and the very large. When they overlap, they are incompatible. For example, quantum mechanics is linear, relativity isn't. Wanted: an equation that explains why both are so successful, but does the job of both with no logical inconsistencies. There are many candidates for a Theory of Everything, the best known being the theory of superstrings. This, among other things, introduces extra dimensions of space: six of them, seven in some versions. Superstrings are mathematically elegant, but there is no convincing evidence for them as a description of nature. In any case, it is desperately hard to carry out the calculations needed to extract quantitative predictions from superstring theory.

For all we know, there may not be a Theory of Everything. All of our equations for the physical world may just be oversimplified models, describing limited realms of nature in a way that we can understand, but not capturing the deep structure of reality. Even if nature truly obeys rigid laws, they might not be expressible as equations.

Even if equations are relevant, they need not be simple. They might be so complicated that we can't even write them down. The 3 billion DNA bases of the human genome are, in a sense, part of the equation for a human being. They are parameters that might be inserted into a more general equation for biological development. It is (barely) possible to print the genome on paper; it would need about two thousand books the size of this one. It fits into a computer memory fairly easily. But it's only one tiny part of any hypothetical human equation.
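The figures in this paragraph can be checked with a little arithmetic. The sketch below is only a rough illustration: the 3 billion bases and two thousand books come from the text, while the packing of two bits per base (enough to distinguish the four letters A, C, G and T) is an assumption made here, as are the function names.

```python
# Rough arithmetic behind "two thousand books" and "fits into a computer memory".
BASES = 3_000_000_000   # DNA bases in the human genome (figure from the text)
BOOKS = 2_000           # number of printed volumes (figure from the text)
BITS_PER_BASE = 2       # assumption: four letters A, C, G, T need 2 bits each


def letters_per_book(bases=BASES, books=BOOKS):
    """Number of genome letters each printed volume would have to hold."""
    return bases // books


def packed_megabytes(bases=BASES, bits_per_base=BITS_PER_BASE):
    """Storage needed for the whole sequence, in megabytes (10**6 bytes)."""
    return bases * bits_per_base / 8 / 1_000_000


if __name__ == "__main__":
    print(letters_per_book())   # 1500000
    print(packed_megabytes())   # 750.0
```

On these assumptions each volume carries about one and a half million letters, and the whole sequence packs into roughly 750 megabytes, which is consistent with the remark that it fits into a computer memory fairly easily.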

When equations become that complex, we need help. Computers are already extracting equations from big sets of data, in circumstances where the usual human methods fail or are too opaque to be useful. A new approach called evolutionary computing extracts significant patterns: specifically, formulas for conserved quantities, things that don't change. One such system, called Eureqa, formulated by Michael Schmidt and Hod Lipson, has scored some successes. Software like this might help. Or it might not lead anywhere that really matters.

Some scientists, especially those with backgrounds in computing, think that it's time we abandoned traditional equations altogether, especially continuum ones like ordinary and partial differential equations. The future is discrete, it comes in whole numbers, and the equations should give way to algorithms: recipes for calculating things. Instead of solving the equations, we should simulate the world digitally by running the algorithms. Indeed, the world itself may be digital. Stephen Wolfram made a case for this view in his controversial book A New Kind of Science, which advocates a type of complex system called a cellular automaton. This is an array of cells, typically small squares, each existing in a variety of distinct states. The cells interact with their neighbours according to fixed rules. They look a bit like an eighties computer game, with coloured blocks chasing each other over the screen.

Wolfram puts forward several reasons why cellular automata should be superior to traditional mathematical equations. In particular, some of them can carry out any calculation that could be performed by a computer, the simplest being the famous Rule 110 automaton. This can find successive digits of π, solve the three-body equations numerically, implement the Black–Scholes formula for a call option, whatever. Traditional methods for solving equations are more limited. I don't find this argument terribly convincing, because it is also true that any cellular automaton can be simulated by a traditional dynamical system. What counts is not whether one mathematical system can simulate another, but which is most effective for solving problems or providing insights. It's quicker to sum a traditional series for π by hand than it is to calculate the same number of digits using the Rule 110 automaton.
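To make the idea of a cellular automaton concrete, here is a minimal sketch of Rule 110 in Python. It is an illustration only, not anything taken from Wolfram's book: the function names, the wrap-around treatment of the two end cells, and the choice of starting row are all assumptions made for the example. Each cell is 0 or 1, and its next state depends only on itself and its two neighbours; the binary digits of the number 110 list the outcome for each of the eight possible neighbourhoods.

```python
RULE = 110  # binary 01101110: one output bit for each of the 8 neighbourhoods


def step(cells, rule=RULE):
    """Apply one update of an elementary cellular automaton to a row of 0/1 cells.

    Assumption: the row wraps around, so the first and last cells are neighbours.
    """
    n = len(cells)
    new = []
    for i in range(n):
        left = cells[(i - 1) % n]
        centre = cells[i]
        right = cells[(i + 1) % n]
        # Read the three cells as a binary number from 0 to 7 ...
        index = (left << 2) | (centre << 1) | right
        # ... and look up the matching bit of the rule number.
        new.append((rule >> index) & 1)
    return new


def run(width=32, steps=16):
    """Start from a single live cell at the right-hand end and record each row."""
    cells = [0] * width
    cells[-1] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells)
        history.append(cells)
    return history


if __name__ == "__main__":
    for row in run():
        print("".join("#" if c else "." for c in row))
```

Running the script prints one row per time step, with a pattern growing leftwards from the single starting cell. The rule itself is nothing more than an eight-entry lookup table, which is what makes its ability to carry out any computation so striking, and also why using it to compute digits of π would be so laborious.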

However, it is still entirely credible that we might soon find new laws of nature based on discrete, digital structures and systems. The future may consist of algorithms, not equations. But until that day dawns, if ever, our greatest insights into nature's laws take the form of equations, and we should learn to understand them and appreciate them. Equations have a track record. They really have changed the world, and they will change it again.

Chapter 1

1 The Penguin Book of Curious and Interesting Mathematics by David Wells quotes a brief form of the joke: An Indian chief had three wives who were preparing to give birth, one on a buffalo hide, one on a bear hide, and the third on a hippopotamus hide. In due course, the first gave him a son, the second a daughter, and the third, twins, a boy and a girl, thereby illustrating the well-known theorem that the squaw on the hippopotamus is equal to the sum of the squaws on the other two hides. The joke goes back at least to the mid-1950s, when it was broadcast in the BBC radio series 'My Word', hosted by comedy scriptwriters Frank Muir and Denis Norden.

2 Quoted without reference on: http://www-history.mcs.st-and.ac.uk/HistTopics/Babylonian_Pythagoras.html

3 A. Sachs, A. Goetze, and O. Neugebauer. Mathematical Cuneiform Texts, American Oriental Society, New Haven 1945.

4

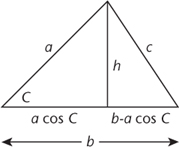

The figure is repeated for convenience in Figure 60.