The Singularity Is Near: When Humans Transcend Biology

Authors: Ray Kurzweil

Generally, by the time a paradigm approaches its asymptote in price-performance, the next technical paradigm is already working in niche applications. For example, in the 1950s engineers were shrinking vacuum tubes to provide greater price-performance for computers, until the process became no longer feasible. At this point, around 1960, transistors had already achieved a strong niche market in portable radios and were subsequently used to replace vacuum tubes in computers.

The resources underlying the exponential growth of an evolutionary process are relatively unbounded. One such resource is the (ever-growing) order of the evolutionary process itself (since, as I pointed out, the products of an evolutionary process continue to grow in order). Each stage of evolution provides more powerful tools for the next. For example, in biological evolution, the advent of DNA enabled more powerful and faster evolutionary “experiments.” Or to take a more recent example, the advent of computer-assisted design tools allows rapid development of the next generation of computers.

The other required resource for continued exponential growth of order is the “chaos” of the environment in which the evolutionary process takes place and which provides the options for further diversity. The chaos provides the variability to permit an evolutionary process to discover more powerful and efficient solutions. In biological evolution, one source of diversity is the mixing and matching of gene combinations through sexual reproduction. Sexual reproduction itself was an evolutionary innovation that accelerated the entire process of biological adaptation and provided for greater diversity of genetic combinations than nonsexual reproduction. Other sources of diversity are mutations and ever-changing environmental conditions. In technological evolution, human ingenuity combined with variable market conditions keeps the process of innovation going.

Fractal Designs

A key question concerning the information content of biological systems is how it is possible for the genome, which contains comparatively little information, to produce a system such as a human, which is vastly more complex than the genetic information that describes it. One way of understanding this is to view the designs of biology as “probabilistic fractals.” A deterministic fractal is a design in which a single design element (called the “initiator”) is replaced with multiple elements (together called the “generator”). In a second iteration of fractal expansion, each element in the generator itself becomes an initiator and is replaced with the elements of the generator (scaled to the smaller size of the second-generation initiators). This process is repeated many times, with each newly created element of a generator becoming an initiator and being replaced with a new scaled generator. Each new generation of fractal expansion adds apparent complexity but requires no additional design information. A probabilistic fractal adds the element of uncertainty. Whereas a deterministic fractal will look the same every time it is rendered, a probabilistic fractal will look different each time, although with similar characteristics. In a probabilistic fractal, the probability of each generator element being applied is less than 1. In this way, the resulting designs have a more organic appearance. Probabilistic fractals are used in graphics programs to generate realistic-looking images of mountains, clouds, seashores, foliage, and other organic scenes. A key aspect of a probabilistic fractal is that it enables the generation of a great deal of apparent complexity, including extensive varying detail, from a relatively small amount of design information. Biology uses this same principle. Genes supply the design information, but the detail in an organism is vastly greater than the genetic design information.
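The initiator-and-generator expansion described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the Koch-style generator shape and the names `GENERATOR`, `expand`, and `render` are hypothetical choices, not anything specified in the text. Setting `probability` to 1.0 gives a deterministic fractal; any value below 1.0 gives a probabilistic one in which some elements are left unexpanded.

```python
import random

# Assumed generator: a Koch-style bump, given as points along a unit
# initiator that runs from 0 to 1 in the complex plane. The shape is an
# illustrative choice; the text does not prescribe one.
GENERATOR = [0, 1 / 3, 0.5 + (3 ** 0.5 / 6) * 1j, 2 / 3, 1]


def expand(segments, probability=1.0, rng=None):
    """Replace each segment (initiator) with a scaled copy of the generator.

    Each segment is expanded with the given probability; otherwise it is
    kept unchanged.
    """
    rng = rng or random.Random()
    result = []
    for start, end in segments:
        if rng.random() < probability:
            delta = end - start
            # Scale and rotate the generator onto this segment.
            points = [start + delta * g for g in GENERATOR]
            result.extend(zip(points, points[1:]))
        else:
            result.append((start, end))
    return result


def render(iterations, probability=1.0, seed=None):
    """Expand a single unit segment the requested number of times."""
    rng = random.Random(seed)
    segments = [(0j, 1 + 0j)]
    for _ in range(iterations):
        segments = expand(segments, probability, rng)
    return segments


if __name__ == "__main__":
    print(len(render(5)))                # deterministic: 4 ** 5 segments
    print(len(render(5, 0.7, seed=1)))   # probabilistic: depends on the seed
```

The design information here is the five-point generator plus one probability, and it stays the same size no matter how many iterations are run, while the number of segments in the deterministic case grows fourfold with each iteration.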

Some observers misconstrue the amount of detail in biological systems such as the brain by arguing, for example, that the exact configuration of every microstructure (such as each tubule) in each neuron is precisely designed and must be exactly the way it is for the system to function. In order to understand how a biological system such as the brain works, however, we need to understand its design principles, which are far simpler (that is, contain far less information) than the extremely detailed structures that the genetic information generates through these iterative, fractal-like processes. There are only eight hundred million bytes of information in the entire human genome, and only about thirty to one hundred million bytes after data compression is applied. This is about one hundred million times less information than is represented by all of the interneuronal connections and neurotransmitter concentration patterns in a fully formed human brain.
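As a rough check on these figures, the arithmetic can be written out. The sketch below assumes a genome of about 3.2 billion base pairs at two bits per base; that base-pair count is an outside approximation, not a number given in the text. It also assumes the "one hundred million times" ratio is measured against the compressed genome, which the passage does not state explicitly. All variable names are illustrative.

```python
# Assumption (not from the text): roughly 3.2 billion base pairs.
BASE_PAIRS = 3.2e9
# Four possible bases means log2(4) = 2 bits per base.
BITS_PER_BASE = 2

raw_genome_bytes = BASE_PAIRS * BITS_PER_BASE / 8

# Figures taken from the text.
COMPRESSED_GENOME_BYTES = (30e6, 100e6)   # thirty to one hundred million bytes
BRAIN_TO_GENOME_RATIO = 1e8               # "one hundred million times"

# Brain information implied by the ratio, if it refers to the compressed genome.
implied_brain_bytes = tuple(
    size * BRAIN_TO_GENOME_RATIO for size in COMPRESSED_GENOME_BYTES
)

if __name__ == "__main__":
    print(f"raw genome: {raw_genome_bytes:.1e} bytes")
    print(f"implied brain state: {implied_brain_bytes[0]:.0e} "
          f"to {implied_brain_bytes[1]:.0e} bytes")
```

Under these assumptions the raw genome comes to about eight hundred million bytes, matching the text, and the implied information content of a fully formed brain falls on the order of 10^15 to 10^16 bytes.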

Consider how the principles of the law of accelerating returns apply to the epochs we discussed in the first chapter. The combination of amino acids into proteins and of nucleic acids into strings of RNA established the basic paradigm of biology. Strings of RNA (and later DNA) that self-replicated (Epoch Two) provided a digital method to record the results of evolutionary experiments. Later on, the evolution of a species that combined rational thought (Epoch Three) with an opposable appendage (the thumb) caused a fundamental paradigm shift from biology to technology (Epoch Four). The upcoming primary paradigm shift will be from biological thinking to a hybrid combining biological and nonbiological thinking (Epoch Five), which will include “biologically inspired” processes resulting from the reverse engineering of biological brains.

If we examine the timing of these epochs, we see that they have been part of a continuously accelerating process. The evolution of life-forms required billions of years for its first steps (primitive cells, DNA), and then progress accelerated. During the Cambrian explosion, major paradigm shifts took only tens of millions of years. Later, humanoids developed over a period of millions of years, and Homo sapiens over a period of only hundreds of thousands of years. With the advent of a technology-creating species the exponential pace became too fast for evolution through DNA-guided protein synthesis, and evolution moved on to human-created technology. This does not imply that biological (genetic) evolution is not continuing, just that it is no longer leading the pace in terms of improving order (or of the effectiveness and efficiency of computation).

Farsighted Evolution

There are many ramifications of the increasing order and complexity that have resulted from biological evolution and its continuation through technology. Consider the boundaries of observation. Early biological life could observe local events several millimeters away, using chemical gradients. When sighted animals evolved, they were able to observe events that were miles away. With the invention of the telescope, humans could see other galaxies millions of light-years away. Conversely, using microscopes, they could also see cellular-size structures. Today humans armed with contemporary technology can see to the edge of the observable universe, a distance of more than thirteen billion light-years, and down to quantum-scale subatomic particles.

Consider the duration of observation. Single-cell animals could remember events for seconds, based on chemical reactions. Animals with brains could remember events for days. Primates with culture could pass down information through several generations. Early human civilizations with oral histories were able to preserve stories for hundreds of years. With the advent of written language the permanence extended to thousands of years.

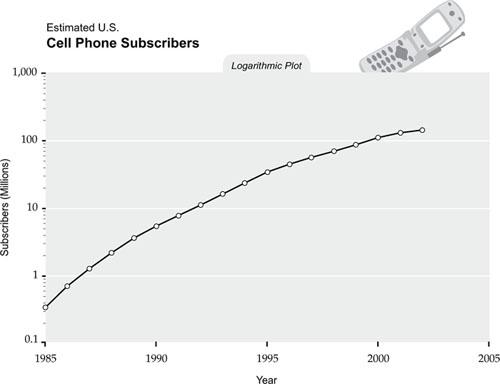

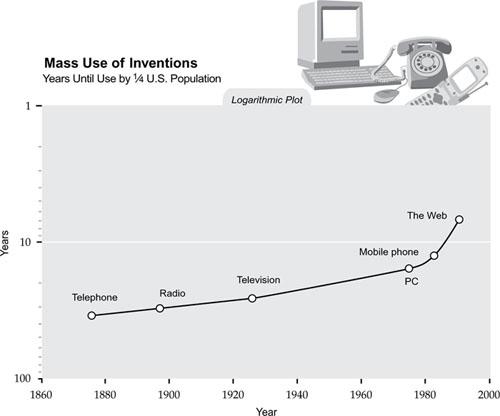

As one of many examples of the acceleration of the technology paradigm-shift rate, it took about a half century for the late-nineteenth-century invention of the telephone to reach significant levels of usage (see the figure below).

In comparison, the late-twentieth-century adoption of the cell phone took only a decade.

Overall we see a smooth acceleration in the adoption rates of communication technologies over the past century.

As discussed in the previous chapter, the overall rate of adopting new paradigms, which parallels the rate of technological progress, is currently doubling every decade. That is, the time to adopt new paradigms is going down by half each decade. At this rate, technological progress in the twenty-first century will be equivalent (in the linear view) to two hundred centuries of progress (at the rate of progress in 2000).
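The order of magnitude of this claim can be checked with a simple model. The sketch below is not the author's own calculation; it assumes only that the rate of progress doubles every ten years, with the rate in 2000 defined as one year of progress per year, and it totals the progress over one hundred years in three ways. The function names and the `rate_at` parameter are hypothetical.

```python
import math


def stepwise_progress(years=100, doubling_years=10, rate_at="end"):
    """Total progress when each period runs at one fixed rate.

    rate_at="end" uses the rate reached at the end of each period;
    rate_at="start" uses the rate at the beginning of each period.
    The result is in years of progress at the initial (year-2000) rate.
    """
    periods = years // doubling_years
    first = 1 if rate_at == "end" else 0
    return sum(doubling_years * 2 ** k for k in range(first, first + periods))


def continuous_progress(years=100, doubling_years=10):
    """Total progress when the rate grows smoothly as 2 ** (t / doubling_years)."""
    return doubling_years / math.log(2) * (2 ** (years / doubling_years) - 1)


if __name__ == "__main__":
    print(stepwise_progress(rate_at="end"))
    print(stepwise_progress(rate_at="start"))
    print(round(continuous_progress()))
```

Under these assumptions the century yields 20,460 years of progress when each decade is credited with its end-of-decade rate, 10,230 years with its start-of-decade rate, and roughly 14,759 years with smooth growth. The text's figure of two hundred centuries corresponds to the upper end of that range.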

The S-Curve of a Technology as Expressed in Its Life Cycle

A machine is as distinctively and brilliantly and expressively human as a violin sonata or a theorem in Euclid.

—Gregory Vlastos

It is a far cry from the monkish calligrapher, working in his cell in silence, to the brisk “click, click” of the modern writing machine, which in a quarter of a century has revolutionized and reformed business.

—Scientific American, 1905

No communication technology has ever disappeared, but instead becomes increasingly less important as the technological horizon widens.

—Arthur C. Clarke

I always keep a stack of books on my desk that I leaf through when I run out of ideas, feel restless, or otherwise need a shot of inspiration. Picking up a fat volume that I recently acquired, I consider the bookmaker’s craft: 470 finely printed pages organized into 16-page signatures, all of which are sewn together with white thread and glued onto a gray canvas cord. The hard linen-bound covers, stamped with gold letters, are connected to the signature block by delicately embossed end sheets. This is a technology that was perfected many decades ago. Books constitute such an integral element of our society—both reflecting and shaping its culture—that it is hard to imagine life without them. But the printed book, like any other technology, will not live forever.

The Life Cycle of a Technology

We can identify seven distinct stages in the life cycle of a technology.

- During the precursor stage, the prerequisites of a technology exist, and dreamers may contemplate these elements coming together. We do not, however, regard dreaming to be the same as inventing, even if the dreams are written down. Leonardo da Vinci drew convincing pictures of airplanes and automobiles, but he is not considered to have invented either.

- The next stage, one highly celebrated in our culture, is invention, a very brief stage, similar in some respects to the process of birth after an extended period of labor. Here the inventor blends curiosity, scientific skills, determination, and usually a measure of showmanship to combine methods in a new way and brings a new technology to life.

- The next stage is development, during which the invention is protected and supported by doting guardians (who may include the original inventor). Often this stage is more crucial than invention and may involve additional creation that can have greater significance than the invention itself. Many tinkerers had constructed finely hand-tuned horseless carriages, but it was Henry Ford’s innovation of mass production that enabled the automobile to take root and flourish.

- The fourth stage is maturity. Although continuing to evolve, the technology now has a life of its own and has become an established part of the community. It may become so interwoven in the fabric of life that it appears to many observers that it will last forever. This creates an interesting drama when the next stage arrives, which I call the stage of the false pretenders.

- Here an upstart threatens to eclipse the older technology. Its enthusiasts prematurely predict victory. While providing some distinct benefits, the newer technology is found on reflection to be lacking some key element of functionality or quality. When it indeed fails to dislodge the established order, the technology conservatives take this as evidence that the original approach will indeed live forever.

- This is usually a short-lived victory for the aging technology. Shortly thereafter, another new technology typically does succeed in rendering the original technology to the stage of obsolescence. In this part of the life cycle, the technology lives out its senior years in gradual decline, its original purpose and functionality now subsumed by a more spry competitor.

- In this stage, which may comprise 5 to 10 percent of a technology’s life cycle, it finally yields to antiquity (as did the horse and buggy, the harpsichord, the vinyl record, and the manual typewriter).

In the mid-nineteenth century there were several precursors to the phonograph, including Léon Scott de Martinville’s phonautograph, a device that recorded sound vibrations as a printed pattern. It was Thomas Edison, however, who brought all of the elements together and invented the first device that could both record and reproduce sound in 1877. Further refinements were necessary for the phonograph to become commercially viable. It became a fully mature technology in 1949 when Columbia introduced the 33-rpm long-playing record (LP) and RCA Victor introduced the 45-rpm disc. The false pretender was the cassette tape, introduced in the 1960s and popularized during the 1970s. Early enthusiasts predicted that its small size and ability to be rerecorded would make the relatively bulky and scratchable record obsolete.

Despite these obvious benefits, cassettes lack random access and are prone to their own forms of distortion and lack of fidelity. The compact disc (CD) delivered the mortal blow. With the CD providing both random access and a level of quality close to the limits of the human auditory system, the phonograph record quickly entered the stage of obsolescence. Although still produced, the technology that Edison gave birth to almost 130 years ago has now reached antiquity.

Consider the piano, an area of technology that I have been personally involved with replicating. In the early eighteenth century Bartolommeo Cristofori was seeking a way to provide a touch response to the then-popular harpsichord so that the volume of the notes would vary with the intensity of the touch of the performer. Called gravicembalo col piano e forte (“harpsichord with soft and loud”), his invention was not an immediate success. Further refinements, including Stein’s Viennese action and Zumpe’s English action, helped to establish the “piano” as the preeminent keyboard instrument. It reached maturity with the development of the complete cast-iron frame, patented in 1825 by Alpheus Babcock, and has seen only subtle refinements since then. The false pretender was the electric piano of the early 1980s. It offered substantially greater functionality. Compared to the single (piano) sound of the acoustic piano, the electronic variant offered dozens of instrument sounds, sequencers that allowed the user to play an entire orchestra at once, automated accompaniment, educational programs to teach keyboard skills, and many other features. The only feature it was missing was a good-quality piano sound.

This crucial flaw and the resulting failure of the first generation of electronic pianos led to the widespread conclusion that the piano would never be replaced by electronics. But the “victory” of the acoustic piano will not be permanent. With their far greater range of features and price-performance, digital pianos already exceed the sales of acoustic pianos in homes. Many observers feel that the quality of the “piano” sound on digital pianos now equals or exceeds that of the upright acoustic piano. With the exception of concert and luxury grand pianos (a small part of the market), the sale of acoustic pianos is in decline.

From Goat Skins to Downloads

So where in the technology life cycle is the book? Among its precursors were Mesopotamian clay tablets and Egyptian papyrus scrolls. In the second century B.C., the Ptolemies of Egypt created a great library of scrolls at Alexandria and outlawed the export of papyrus to discourage competition.

What were perhaps the first books were created by Eumenes II, ruler of ancient Greek Pergamum, using pages of vellum made from the skins of goats and sheep, which were sewn together between wooden covers. This technique enabled Eumenes to compile a library equal to that of Alexandria. Around the same time, the Chinese had also developed a crude form of book made from bamboo strips.

The development and maturation of books has involved three great advances. Printing, first experimented with by the Chinese in the eighth century A.D. using raised wood blocks, allowed books to be reproduced in much larger quantities, expanding their audience beyond government and religious leaders. Of even greater significance was the advent of movable type, which the Chinese and Koreans experimented with by the eleventh century, but the complexity of Asian characters prevented these early attempts from being fully successful. Johannes Gutenberg, working in the fifteenth century, benefited from the relative simplicity of the Roman character set. He produced his Bible, the first large-scale work printed entirely with movable type, in 1455.

While there has been a continual stream of evolutionary improvements in the mechanical and electromechanical process of printing, the technology of bookmaking did not see another qualitative leap until the availability of computer typesetting, which did away with movable type about two decades ago. Typography is now regarded as a part of digital image processing.

With books a fully mature technology, the false pretenders arrived about twenty years ago with the first wave of “electronic books.” As is usually the case, these false pretenders offered dramatic qualitative and quantitative benefits. CD-ROM- or flash memory–based electronic books can provide the equivalent of thousands of books with powerful computer-based search and knowledge navigation features. With Web- or CD-ROM- and DVD-based encyclopedias, I can perform rapid word searches using extensive logic rules, something that is just not possible with the thirty-three-volume “book” version I possess. Electronic books can provide pictures that are animated and that respond to our input. Pages are not necessarily ordered sequentially but can be explored along more intuitive connections.

As with the phonograph record and the piano, this first generation of false pretenders was (and still is) missing an essential quality of the original, which in this case is the superb visual characteristics of paper and ink. Paper does not flicker, whereas the typical computer screen is displaying sixty or more fields per second. This is a problem because of an evolutionary adaptation of the primate visual system. We are able to see only a very small portion of the visual field with high resolution. This portion, imaged by the fovea in the retina, is focused on an area about the size of a single word at twenty-two inches away. Outside of the fovea, we have very little resolution but exquisite sensitivity to changes in brightness, an ability that allowed our primitive forebears to quickly detect a predator that might be attacking. The constant flicker of a video graphics array (VGA) computer screen is detected by our eyes as motion and causes constant movement of the fovea. This substantially slows down reading speeds, which is one reason that reading on a screen is less pleasant than reading a printed book. This particular issue has been solved with flat-panel displays, which do not flicker.

Other crucial issues include contrast—a good-quality book has an ink-to-paper contrast of about 120:1; typical screens are perhaps half of that—and resolution. Print and illustrations in a book represent a resolution of about 600 to 1000 dots per inch (dpi), while computer screens are about one tenth of that.

The size and weight of computerized devices are approaching those of books, but the devices still are heavier than a paperback book. Paper books also do not run out of battery power.

Most important, there is the matter of the available software, by which I mean the enormous installed base of print books. Fifty thousand new print books are published each year in the United States, and millions of books are already in circulation. There are major efforts under way to scan and digitize print materials, but it will be a long time before the electronic databases have a comparable wealth of material. The biggest obstacle here is the understandable hesitation of publishers to make the electronic versions of their books available, given the devastating effect that illegal file sharing has had on the music-recording industry.

Solutions are emerging to each of these limitations. New, inexpensive display technologies have contrast, resolution, lack of flicker, and viewing angle comparable to high-quality paper documents. Fuel-cell power for portable electronics is being introduced, which will keep electronic devices powered for hundreds of hours between fuel-cartridge changes. Portable electronic devices are already comparable to the size and weight of a book. The primary issue is going to be finding secure means of making electronic information available. This is a fundamental concern for every level of our economy. Everything—including physical products, once nanotechnology-based manufacturing becomes a reality in about twenty years—is becoming information.