Understanding Computation (26 page)

In

Equivalence

, we saw

that nondeterminism makes no difference to what a finite automaton is capable of,

while

Nonequivalence

showed us that a nondeterministic pushdown automaton

can do more than a deterministic one. That leaves us with an obvious question about Turing

machines: does adding nondeterminism

[

38

]

make a Turing machine more powerful?

In this case the answer is no: a nondeterministic Turing machine

can’t do any more than a deterministic one. Pushdown automata are the

exception here, because both DFAs and DTMs have enough power to simulate

their nondeterministic counterparts. A single state of a finite automaton

can be used to represent a combination of many states, and a single Turing

machine tape can be used to store the contents of many tapes, but a single

pushdown automaton stack can’t represent many possible stacks at

once.

So, just as with finite automata, a deterministic Turing machine can simulate a

nondeterministic one. The simulation works by using the tape to store a queue of suitably

encoded Turing machine configurations, each one containing a possible current state and tape

of the simulated machine. When the simulation starts, there’s only one configuration stored on

the tape, representing the starting configuration of the simulated machine. Each step of the

simulated computation is performed by reading the configuration at the front of the queue,

finding each rule that applies to it, and using that rule to generate a new configuration that

is written onto the tape at the back of the queue. Once this has been done for every

applicable rule, the frontmost configuration is erased and the process starts again with the

next configuration in the queue. The simulated machine step is repeated until the

configuration at the front of the queue represents a machine that has reached an accept

state.

This technique allows a deterministic Turing machine to explore all

possible configurations of a simulated machine in breadth-first order; if

there is any way for the nondeterministic machine to reach an accept

state, the simulation will find it, even if other execution paths lead to

infinite loops. Actually implementing the simulation as a rulebook

requires a lot of detail, so we won’t try to do it here, but the fact that

it’s possible means that we can’t make a Turing machine any more powerful

just by adding nondeterminism.

Deterministic

Turing machines represent a dramatic tipping point from

limited computing machines to full-powered ones. In fact, any attempt to

upgrade the specification of Turing machines to make them more powerful is

doomed to failure, because they’re already capable of

simulating

any potential enhancement.

[

39

]

While adding certain features can make Turing machines

smaller or more efficient, there’s no way to give them fundamentally new

capabilities.

We’ve already seen why this is true for nondeterminism. Let’s look at four other

extensions to conventional Turing machines—internal storage, subroutines, multiple tapes, and

multidimensional tape—and see why none of them provides an increase in computational power.

While some of the simulation techniques involved are complicated, in the end, they’re all just

a matter of programming.

Designing a rulebook for a

Turing machine can be frustrating because of the lack of arbitrary internal

storage. For instance, we often want the machine to move the tape head to a particular

position, read the character that’s stored there, then move to a different part of the tape

and perform some action that depends on which character it read earlier. Superficially, this

seems impossible, because there’s nowhere for the machine to “remember” that character—it’s

still written on the tape, of course, and we can move the head back over there and read it

again whenever we like, but once the head moves away from that square, we can no longer

trigger a rule based on its contents.

It would be more convenient if a Turing machine had some temporary

internal storage—call it “RAM,” “registers,” “local variables,” or

whatever—where it could save the character from the current tape square

and refer back to it later, even after the head has moved to a different

part of the tape entirely. In fact, if a Turing machine had that

capability, we wouldn’t need to limit it to storing characters from the

tape: it could store any relevant information, like the intermediate

result of some calculation the machine is performing, and free us from

the chore of having to move the head around to write scraps of data onto

the tape. This extra flexibility feels like it could give a Turing

machine the ability to perform new kinds of tasks.

Well, as with nondeterminism, adding extra internal storage to a

Turing machine certainly would make certain tasks easier to perform, but

it wouldn’t enable the machine to do anything it can’t already do. The

desire to store intermediate results inside the machine instead of on

the tape is relatively easy to dismiss, because the tape works just fine

for storing that kind of information, even if it takes a while for the

head to move back and forth to access it. But we have to take the

character-remembering point more seriously, because a Turing machine

would be very limited if it couldn’t make use of the contents of a tape

square after moving the head somewhere else.

Fortunately, a Turing machine already has perfectly good internal storage: its

current state. There is no upper limit to the number of states available to a

Turing machine, although for any particular set of rules, that number must be finite and

decided in advance, because there’s no way to create new states during a computation. If

necessary, we can design a machine with a hundred states, or a thousand, or a billion, and

use its current state to retain arbitrary amounts of information from one step to the

next.

This inevitably means duplicating rules to accommodate multiple states whose meanings

are identical except for the information they are “remembering.” Instead of having a single

state that means “scan right looking for a blank square,” a machine can have one state for

“scan right looking for a blank square (remembering that I read anaearlier),” another for “scan right looking for a blank square (remembering

that I read abearlier),” and so on for all possible

characters—although the number of characters is finite too, so this duplication always has a

limit.

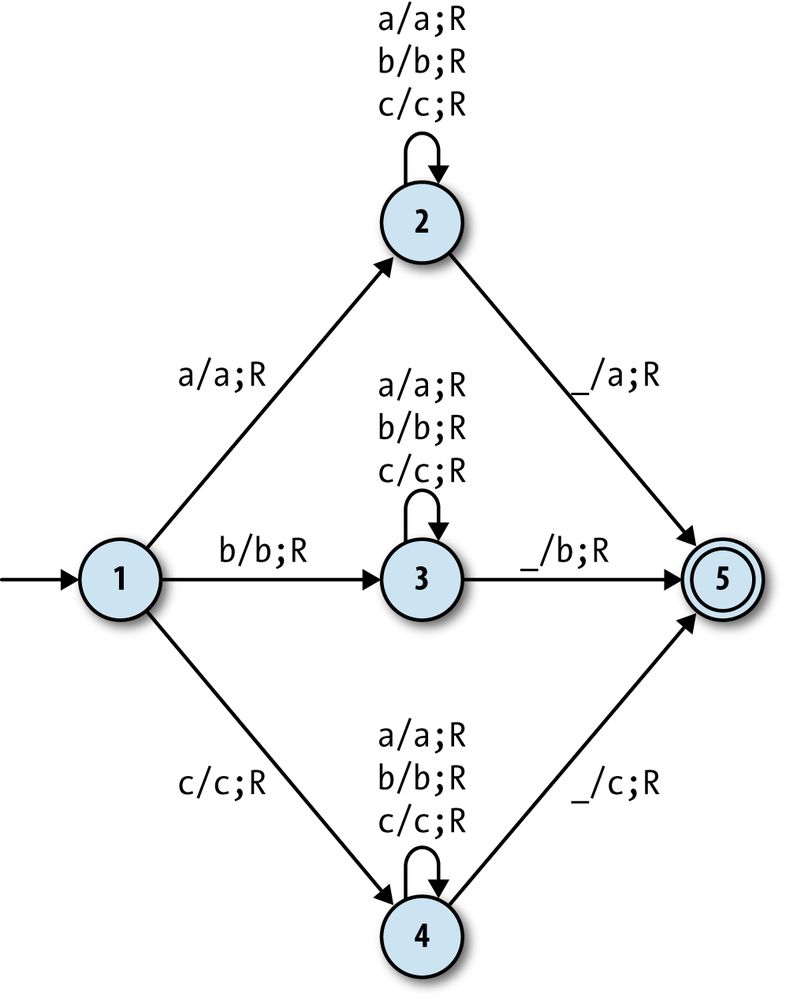

Here’s a simple Turing machine that uses this technique to copy a

character from the beginning of a string to the end:

>>rulebook=DTMRulebook.new([# state 1: read the first character from the tapeTMRule.new(1,'a',2,'a',:right),# remember aTMRule.new(1,'b',3,'b',:right),# remember bTMRule.new(1,'c',4,'c',:right),# remember c# state 2: scan right looking for end of string (remembering a)TMRule.new(2,'a',2,'a',:right),# skip aTMRule.new(2,'b',2,'b',:right),# skip bTMRule.new(2,'c',2,'c',:right),# skip cTMRule.new(2,'_',5,'a',:right),# find blank, write a# state 3: scan right looking for end of string (remembering b)TMRule.new(3,'a',3,'a',:right),# skip aTMRule.new(3,'b',3,'b',:right),# skip bTMRule.new(3,'c',3,'c',:right),# skip cTMRule.new(3,'_',5,'b',:right),# find blank, write b# state 4: scan right looking for end of string (remembering c)TMRule.new(4,'a',4,'a',:right),# skip aTMRule.new(4,'b',4,'b',:right),# skip bTMRule.new(4,'c',4,'c',:right),# skip cTMRule.new(4,'_',5,'c',:right)# find blank, write c])=> #>>tape=Tape.new([],'b',['c','b','c','a'],'_')=> #>>dtm=DTM.new(TMConfiguration.new(1,tape),[5],rulebook)=> #>>dtm.run;dtm.current_configuration.tape=> #

States 2, 3, and 4 of this machine are almost identical, except they each represent a

machine that is remembering a different character from the beginning of the string, and in

this case, they all do something different when they reach the end.

The machine only works for strings made up of the charactersa,b, andc;

if we wanted it to work for strings containing

any

alphabetic characters (or alphanumeric

characters, or whatever larger set we chose), we’d have to add a lot

more states—one for each character that might need to be

remembered—and a lot more rules to go with them.

Exploiting the current state in this way allows us to design

Turing machines that can remember any finite combination of facts while

the tape head moves back and forth, effectively giving us the same

capabilities as a machine with explicit “registers” for internal

storage, at the expense of using a large number of

states.

A Turing machine’s

rulebook is a

long, hardcoded list of extremely low-level instructions, and it can be

difficult to write these rules without losing sight of the high-level task that the machine

is meant to perform. Designing a rulebook would be easier if there was a way of calling a

subroutine

: if some part of the machine could store all the rules

for, say, incrementing a number, then our rulebook could just say “now increment a number”

instead of having to manually string together the instructions to make that happen. And

again, perhaps that extra flexibility would allow us to design machines with new

capabilities.

But this is another feature that is really just about convenience,

not overall power. Just like the finite automata that implement

individual fragments of a regular expression (see

Semantics

), several small Turing

machines can be connected together to make a larger one, with each small

machine effectively acting as a subroutine. The binary-increment machine

we saw earlier can have its states and rules built into a larger machine

that adds two binary numbers together, and that adder can itself be

built into an even larger machine that performs multiplication.

When the smaller machine only needs to be “called” from a single

state of the larger one, this is easy to arrange: just include a copy of

the smaller machine, merging its start and accept states with the states

of the larger machine where the subroutine call should begin and end.

This is how we’d expect to use the incrementing machine as part of an

adding machine, because the overall design of the rulebook would be to

repeat the single task “if the first number isn’t zero, decrement the

first number and increment the second number” as many times as possible.

There’d only be one place in the machine where incrementing would need

to happen, and only one place for execution to continue after the

incrementing work had completed.

The only difficulty comes when we want to call a particular subroutine from more than

one place in the overall machine. A Turing machine has no way to store a “return address” to

let the subroutine know which state to move back into once it has finished, so

superficially, we can’t support this more general kind of code reuse. But we can solve this

problem with duplication, just like we did in

Internal Storage

: rather

than incorporating a single copy of the smaller machine’s states and rules, we can build in

many copies, one for each place where it needs to be used in the larger machine.

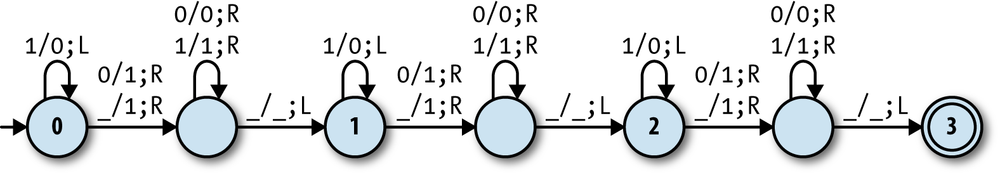

For example, the easiest way to turn the “increment a number” machine into an “add three

to a number” machine is to connect three copies together to achieve an overall design of

“increment the number, then increment the number, then increment the number.” This takes the

larger machine through several intermediate states that track its progress toward the final

goal, with each use of “increment the number” originating from and returning to a different

intermediate state:

>>defincrement_rules(start_state,return_state)incrementing=start_statefinishing=Object.newfinished=return_state[TMRule.new(incrementing,'0',finishing,'1',:right),TMRule.new(incrementing,'1',incrementing,'0',:left),TMRule.new(incrementing,'_',finishing,'1',:right),TMRule.new(finishing,'0',finishing,'0',:right),TMRule.new(finishing,'1',finishing,'1',:right),TMRule.new(finishing,'_',finished,'_',:left)]end=> nil>>added_zero,added_one,added_two,added_three=0,1,2,3=> [0, 1, 2, 3]>>rulebook=DTMRulebook.new(increment_rules(added_zero,added_one)+increment_rules(added_one,added_two)+increment_rules(added_two,added_three))=> #>>rulebook.rules.length=> 18>>tape=Tape.new(['1','0','1'],'1',[],'_')=> #>>dtm=DTM.new(TMConfiguration.new(added_zero,tape),[added_three],rulebook)=> #>>dtm.run;dtm.current_configuration.tape=> #

The ability to compose states and rules in this way allows us to

build Turing machine rulebooks of arbitrary size and complexity, without

needing any explicit support for subroutines, as long as we’re prepared

to accept the increase in machine size.